Hamiltonian Mechanics

2 Conservation Laws

2.1 Noether theorem

In this section we go deeper into the appearance of conservation laws in the lagrangian formulation and, in particular, into a beautiful and important theorem, due to Emmy Noether, relating conserved quantities to symmetries.

Later on, once we introduce hamiltonian mechanics, we will come back to the study of symmetries and conservation laws in a more sophisticated way.

We have already seen that, when the lagrangian is not explicitly time dependent, the total energy is a conserved quantity. Can we expect more?

Let’s look at another example before getting to the heart of the matter.

Example 2.1 (kinetic momentum). Consider a mechanical system with \(n\) degrees of freedom described by the lagrangian \(L=L(q,\dot q)\), \(q=(q^1,\ldots ,q^n)\). If there is \(i\) such that \(L\) does not depend on \(q^i\), i.e.

\(\seteqnumber{0}{2.}{0}\)\begin{eqnarray} \pdv {L}{q^i} = 0, \end{eqnarray}

then the coordinate \(q^i\) is said to be cyclic and the kinetic momentum

\(\seteqnumber{0}{2.}{1}\)\begin{eqnarray} p_i := \frac {\partial L}{\partial \dot q^i} \end{eqnarray}

is a conserved quantity.

This is easily verified using the Euler-Lagrange equations:

\(\seteqnumber{0}{2.}{2}\)\begin{eqnarray} \frac {\dd p_i}{\dd t} = \dv {t} \left (\frac {\partial L}{\partial \dot q^i}\right )= \frac {\partial L}{\partial q^i} = 0. \end{eqnarray}

Nothing apparent prevents us from expecting other conserved quantities. However, before we can give a precise statement, we need to introduce some preliminary concepts.

Let \(M\) be a smooth manifold. A smooth map \(\phi : M \to M\) is called a diffeomorphism if it is invertible and its inverse \(\phi ^{-1}\) is smooth. At each point \(q\in M\) we can define the differential of \(\phi \), i.e., the linear map \(\phi _*\) between tangent spaces (sometimes also denoted \(\dd \phi _q\))

\(\seteqnumber{0}{2.}{3}\)\begin{equation} \phi _*: T_q M \to T_{\phi (q)} M, \quad \phi _* v := \phi _*(q) v := \dv {t} (\phi \circ \gamma ) \Big |_{t=0}, \quad v\in T_q M, \end{equation}

where \(\gamma :(-\epsilon , \epsilon )\subset \mathbb {R} \to M\) is a smooth curve such that \(\gamma (0)=q\), \(\gamma '(0) = v\). On a euclidean space, for instance, the simplest such curve is \(\gamma (t) = q + v t\).

In local coordinates, the matrix of the linear map \(\phi _*\) coincides with the Jacobian matrix

\(\seteqnumber{0}{2.}{4}\)\begin{equation} \phi _*(q) = \dd \phi _q = \left (\frac {\partial \phi ^i(q)}{\partial q^j}\right )_{1\leq i,j\leq n}. \end{equation}
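As a quick numerical illustration of (2.4) and (2.5), the sketch below compares the definition of \(\phi _*\) through the curve \(\gamma (t) = q + vt\), approximated by a central finite difference in \(t\), with the Jacobian matrix applied to \(v\). The map `phi` is a hypothetical diffeomorphism of \(\mathbb {R}^2\) chosen only for illustration; its inverse is \((u, w) \mapsto (u - \sin (w - 1), w - 1)\).

```python
import math


def phi(q):
    # hypothetical diffeomorphism of R^2: (x, y) -> (x + sin y, y + 1)
    x, y = q
    return (x + math.sin(y), y + 1.0)


def jacobian_phi(q):
    # analytic Jacobian (d phi^i / d q^j): rows indexed by i, columns by j
    x, y = q
    return [[1.0, math.cos(y)], [0.0, 1.0]]


def pushforward_via_curve(f, q, v, h=1e-6):
    # d/dt (f o gamma)(t) at t = 0 with gamma(t) = q + t v, by a central difference
    forward = f(tuple(qi + h * vi for qi, vi in zip(q, v)))
    backward = f(tuple(qi - h * vi for qi, vi in zip(q, v)))
    return tuple((a - b) / (2 * h) for a, b in zip(forward, backward))


def matvec(m, v):
    return tuple(sum(mij * vj for mij, vj in zip(row, v)) for row in m)


q, v = (0.3, -1.2), (2.0, 0.5)
by_curve = pushforward_via_curve(phi, q, v)
by_jacobian = matvec(jacobian_phi(q), v)
print(by_curve)
print(by_jacobian)
```

The two printed vectors agree up to the finite-difference error, which is what (2.5) asserts in coordinates.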

The differential pushes tangent vectors at \(q\) forward to tangent vectors at \(\phi (q)\), which is why it is also called the pushforward.

Let \(L = L(q, \dot q)\), \((q, \dot q)\in TM\), be a lagrangian on the tangent bundle of a smooth manifold \(M\). From now on, we will denote by \((M, L)\) the mechanical system described by such a lagrangian \(L\) on the configuration space \(M\).

A diffeomorphism \(\Phi : M \to M\) is called a symmetry of the mechanical system \((M,L)\) if \(L\) is invariant1 with respect to \(\Phi \), i.e.2

\(\seteqnumber{0}{2.}{5}\)\begin{equation} \label {eq:symmetry} \Phi ^* L (q,\dot q) := L\left (\Phi (q), \Phi _*\dot q\right ) = L(q,\dot q) \qquad \forall (q,\dot q) \in TM. \end{equation}

Note that in the terminology of [Arn89, Chapter 4] one would instead say that \((M,L)\) admits the mapping \(\Phi \).

A fundamental property of symmetries of mechanical systems is summarized by the following theorem.

1 The operation \(\Phi ^*:C^\infty (TM) \to C^\infty (TM)\) defined here is a special case of a more general operation, called pullback, which we will see again in different settings in future chapters.

2 Remember that \((q,\dot q)\in TM\) means, in particular, that \(q\in M\) and \(\dot q \in T_q M\), the tangent space at \(q\).

Theorem 2.1. Let \(\Phi \) be a symmetry of a mechanical system. If \(q(t)\) is a solution of the Euler-Lagrange equations, then \(\Phi (q(t))\) satisfies the same equations.

The converse is false in general: a diffeomorphism \(\Phi \) mapping solutions to solutions need not be a symmetry.

We are almost there. Before stating Noether theorem we need one last definition.

A one–parameter family of diffeomorphisms

\(\seteqnumber{0}{2.}{6}\)\begin{equation} \Phi _s : M \to M \end{equation}

is called a one–parameter group of diffeomorphisms if the following hold:

• \(\Phi _0 = \Id \);

• \(\forall s, t \in \mathbb {R}, \quad \Phi _s(\Phi _t(q)) = \Phi _{s+t}(q)\quad \forall q\in M\).

These two properties immediately give the inverse of \(\Phi _s\): \((\Phi _s)^{-1} = \Phi _{-s}\).

Remark 2.1. To any one–parameter group of diffeomorphisms we can associate a smooth vector field \(X : q\in M \mapsto X(q)\in T_qM\) by

\(\seteqnumber{0}{2.}{7}\)\begin{equation} X(q) := \dv {s} \Phi _s(q) \Big |_{s=0}. \end{equation}

Equivalently, for small values of \(|s|\), the diffeomorphism \(\Phi _s\) acts in coordinates as

\(\seteqnumber{0}{2.}{8}\)\begin{equation} \label {eq:infinitesimalSymmetryExp} q \mapsto \Phi _s(q) = q + s X(q) + O(s^2). \end{equation}

Conversely, given a smooth vector field \(X(q)\), we can reconstruct a one–parameter group of diffeomorphisms (at least for sufficiently small values of \(s\)) as follows. Let \(Q(s, q)\) be the solution of the initial value problem

\(\seteqnumber{0}{2.}{9}\)\begin{equation} \label {eq:NoetherCoords} \frac {\dd Q}{\dd s} = X(Q), \quad Q(0, q) = q. \end{equation}

Assume that there exists \(\epsilon >0\) such that for all \(|s|<\epsilon \) the solution \(Q(s,q)\) exists for every \(q\in M\). Then, the map \(\Phi _s:M\to M\) is defined for \(|s|<\epsilon \) by

\(\seteqnumber{0}{2.}{10}\)\begin{equation} \label {eq:reconstructX} \Phi _s(q) := Q(s, q). \end{equation}
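The reconstruction of \(\Phi _s\) from \(X\) can be tried numerically. The sketch below is only an illustration: it takes the hypothetical field \(X(q^1, q^2) = (-q^2, q^1)\) on \(\mathbb {R}^2\), whose flow is known in closed form (rotation by the angle \(s\)), integrates (2.10) with the classical fourth-order Runge-Kutta method, and checks the group property of Exercise 2.1 and the inverse \(\Phi _{-s}\).

```python
import math


def X(q):
    # hypothetical vector field generating rotations of the plane
    return (-q[1], q[0])


def flow(q, s, steps=1000):
    # approximates Q(s, q), the solution of dQ/ds = X(Q), Q(0, q) = q, with RK4
    h = s / steps
    Q = tuple(q)
    for _ in range(steps):
        k1 = X(Q)
        k2 = X(tuple(a + 0.5 * h * b for a, b in zip(Q, k1)))
        k3 = X(tuple(a + 0.5 * h * b for a, b in zip(Q, k2)))
        k4 = X(tuple(a + h * b for a, b in zip(Q, k3)))
        Q = tuple(a + h / 6 * (b1 + 2 * b2 + 2 * b3 + b4)
                  for a, b1, b2, b3, b4 in zip(Q, k1, k2, k3, k4))
    return Q


def exact_flow(q, s):
    # closed-form flow of X: rotation by the angle s
    c, sn = math.cos(s), math.sin(s)
    return (c * q[0] - sn * q[1], sn * q[0] + c * q[1])


q, s, t = (1.0, 0.5), 0.7, -0.3
composed = flow(flow(q, t), s)   # Phi_s(Phi_t(q))
direct = flow(q, s + t)          # Phi_{s+t}(q)
back = flow(flow(q, s), -s)      # Phi_{-s}(Phi_s(q)), should give back q
print(composed, direct, exact_flow(q, s + t), back)
```

For this field the flow exists for every \(s\), so the restriction \(|s| < \epsilon \) plays no role; for fields whose solutions blow up in finite time it does.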

Exercise 2.1. Show that \(\Phi _s\) as defined in (2.11) is a one–parameter group of diffeomorphisms on \(M\) for \(s,t \in (-\epsilon ,\epsilon )\) such that \(|s + t| < \epsilon \).

For a more thorough account of these topics, and to understand how this relates to the concept of infinitesimal generators of group actions, refer to [J.M13, Chapters 9 and 20] or [Ser20, Chapters 3 and 4].

Naturally, if every \(\Phi _s\) in a one-parameter group of diffeomorphisms is a symmetry of some lagrangian \(L\) on a manifold \(M\), we call \(\Phi _s\) a one-parameter group of symmetries for \((M,L)\). We can finally state Noether3 theorem!

Theorem 2.2 (Noether theorem). Let \(\Phi _s: M \to M\) be a one–parameter group of symmetries of the mechanical system \((M,L)\). Then there exists an integral of motion \(I: TM \to \mathbb {R}\). In local coordinates \(q\) on \(M\), the integral \(I\) takes the form

\(\seteqnumber{0}{2.}{11}\)\begin{equation} I(q,\dot q) := p_i X^i, \quad p_i(q, \dot q) := \pdv {L}{\dot q^i}, \quad X(q) := \dv {s} \Phi _s(q) \Big |_{s=0}. \end{equation}

Although I prefer the presentation of the proof in [Arn89, Chapter 20.B], here I will give one from a slightly different perspective.

Proof. By definition, see (1.82), \(I\) is a first integral if it is constant on the phase curves. Let’s compute its time derivative,

\(\seteqnumber{0}{2.}{12}\)\begin{align} \dv {t} I(q, \dot q) & = \dv {t} \left (p_i(q, \dot q) X^i(q)\right ) \\ & = \frac {\dd p_i}{\dd t} X^i + p_i \frac {\partial X^i}{\partial q^j} \dot q^j \\ & = \left (\dv {t}\pdv {L}{\dot q^i}\right ) X^i + p_i \frac {\partial X^i}{\partial q^j} \dot q^j \\ & = \pdv {L}{q^i} X^i + p_i \frac {\partial X^i}{\partial q^j} \dot q^j. \label {eq:ntref1} \end{align} The last equality follows from the Euler-Lagrange equations.

It remains to show that the right-hand side of (2.16) vanishes, and this is where we use that \(\Phi _s\) is a one–parameter group of symmetries. Using (2.9), we can rewrite the symmetry relation (2.6) for small enough values of \(s\) as

\(\seteqnumber{0}{2.}{16}\)\begin{equation} \label {eq:invariance} L\left ( q + s X(q) + O(s^2), \dot q + s \frac {\partial X}{\partial q} \dot q + O(s^2) \right ) = L(q, \dot q). \end{equation}

Expanding the left-hand side to first order in \(s\), cancelling the term \(L(q, \dot q)\) on both sides, dividing by \(s\) and sending \(s\to 0\), the condition becomes

\(\seteqnumber{0}{2.}{17}\)\begin{equation} \label {eq:ntref2} \pdv {L}{q^i} X^i + p_i \frac {\partial X^i}{\partial q^j} \dot q^j = 0. \end{equation}

This shows that the right-hand side of (2.16) vanishes and, thus, that \(I\) is a first integral. □
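Noether theorem can also be checked numerically. In the sketch below, which is an illustration under stated assumptions rather than part of the proof, a hypothetical particle of mass `m` moves in the plane with lagrangian \(L = \frac m2 |\dot q|^2 - U(q)\). The generator \(X\) of the rotation group \(\Phi _s\) is obtained from (2.8) by a central difference, and \(I = p_i X^i\) (here \(m(q^1\dot q^2 - q^2\dot q^1)\)) is evaluated before and after integrating the equations of motion with RK4. For the rotationally invariant potential \(U = |q|^2/2 + |q|^4/4\), \(\Phi _s\) is a group of symmetries and \(I\) is conserved; for the anisotropic potential \(U = (q^1)^2/2 + 2(q^2)^2\) the rotations are not symmetries, and \(I\) drifts.

```python
import math

m = 1.3  # hypothetical mass


def Phi(s, q):
    # one-parameter group of rotations of the plane
    c, sn = math.cos(s), math.sin(s)
    return (c * q[0] - sn * q[1], sn * q[0] + c * q[1])


def X(q, h=1e-6):
    # generator X(q) = d/ds Phi_s(q) at s = 0, by a central difference, cf. (2.8)
    plus, minus = Phi(h, q), Phi(-h, q)
    return tuple((a - b) / (2 * h) for a, b in zip(plus, minus))


def noether_integral(q, qdot):
    # I = p_i X^i with p_i = dL/d(qdot^i) = m qdot^i
    return sum(m * vi * Xi for vi, Xi in zip(qdot, X(q)))


def make_rhs(grad_U):
    # first-order form of the Euler-Lagrange equations m qddot = -grad U
    def rhs(state):
        x, y, vx, vy = state
        gx, gy = grad_U(x, y)
        return (vx, vy, -gx / m, -gy / m)
    return rhs


def rk4(rhs, state, dt, n):
    for _ in range(n):
        k1 = rhs(state)
        k2 = rhs(tuple(s + 0.5 * dt * k for s, k in zip(state, k1)))
        k3 = rhs(tuple(s + 0.5 * dt * k for s, k in zip(state, k2)))
        k4 = rhs(tuple(s + dt * k for s, k in zip(state, k3)))
        state = tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                      for s, a, b, c, d in zip(state, k1, k2, k3, k4))
    return state


potentials = {
    # U = r^2/2 + r^4/4, invariant under rotations: grad U = (1 + r^2) q
    "central": lambda x, y: ((1 + x * x + y * y) * x, (1 + x * x + y * y) * y),
    # U = x^2/2 + 2 y^2, not invariant under rotations: grad U = (x, 4 y)
    "anisotropic": lambda x, y: (x, 4 * y),
}

start = (1.0, 0.0, 0.2, 0.9)  # (q^1, q^2, qdot^1, qdot^2)
results = {}
for name, grad_U in potentials.items():
    end = rk4(make_rhs(grad_U), start, 1e-3, 5000)
    results[name] = (noether_integral(start[:2], start[2:]),
                     noether_integral(end[:2], end[2:]))
    print(name, results[name])
```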

Example 2.2 (kinetic momentum - reprise). Let’s revisit Example 2.1. For a cyclic coordinate \(q^i\), the lagrangian is invariant with respect to the one–parameter group of translations along the \(q^i\) direction:

\(\seteqnumber{0}{2.}{18}\)\begin{equation} \Phi _s(q^1, \ldots , q^i, \ldots , q^n) = (q^1, \ldots , q^i + s, \ldots , q^n). \end{equation}

The corresponding vector field \(X\) is constant: \(X^j(q) = \delta ^j_i\), i.e., its \(i\)-th component is \(1\) and all the others vanish. By Noether theorem, the conserved quantity is

\(\seteqnumber{0}{2.}{19}\)\begin{equation} I(q,\dot q) = p_j X^j = p_j \delta ^j_i = p_i, \end{equation}

as expected.

Remark 2.2. Noether theorem holds in much greater generality than presented here; in particular, it applies to symmetries in classical field theories. The version stated above can also be proved by reducing the system to one with a cyclic variable: near any point where \(X\) does not vanish, one locally rectifies4 the vector field \(X\) on \(M\), so that \(\Phi _s\) becomes a translation along one coordinate as in Example 2.2, and observes that, by Exercise 1.7, the sum \(p_i X^i\) does not depend on the choice of coordinates on \(M\).

4 See [Ser20, Chapter 3.5].

Exercise 2.2. Assume that the one–parameter group of diffeomorphisms \(\Phi _s\) is not a symmetry for \((M,L)\) in the sense of our definition, but preserves the action \(S\) associated with the lagrangian up to boundary terms. In that case, the invariance condition (2.17) holds only up to a total time derivative [GF00, Chapter 4.20], i.e., there is a function \(f(q)\) on \(M\) such that

\(\seteqnumber{0}{2.}{20}\)\begin{equation} L\left ( q + s X(q), \dot q + s \frac {\partial X}{\partial q} \dot q\right ) = L(q, \dot q) + s \dv {t}f(q) + O(s^2). \end{equation}

Show that in this case the first integral of the system would be

\(\seteqnumber{0}{2.}{21}\)\begin{equation} \widetilde I(q, \dot q) = p_i X^i - f(q). \end{equation}

Remark 2.3. There are also discrete symmetries in nature which don’t depend on a continuous parameter. The so-called parity, for example, is the invariance under reflection, \(\vb *{x} \mapsto -\vb *{x}\). These types of symmetries give rise to conservation laws in quantum physics but not in classical physics.

Let’s now look at some consequences of our discovery, some more immediate than others, starting from constants of motion that we have already encountered.

2.1.1 Homogeneity of space: total momentum

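Consider a closed system of \(N\) particles with lagrangian (1.59),

\(\seteqnumber{0}{2.}{22}\)\begin{equation} L = \frac 12 \sum _{k=1}^N m_k \dot {\vb *{x}}_k^2 - U(\vb *{x}_1, \ldots , \vb *{x}_N), \end{equation}

where, the system being closed, the potential \(U\) depends only on the relative positions \(\vb *{x}_j - \vb *{x}_k\). Then \(L\) is invariant with respect to the translations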

\(\seteqnumber{0}{2.}{23}\)\begin{equation} \Phi _s(\vb *{x}_1, \ldots , \vb *{x}_N) := (\vb *{x}_1 + s \hat {\vb *{x}},\ldots , \vb *{x}_N + s \hat {\vb *{x}}), \end{equation}

for any direction \(\hat {\vb *{x}}\), \(|\hat {\vb *{x}}|=1\) and all \(s\in \mathbb {R}\). I.e.5

\(\seteqnumber{0}{2.}{24}\)\begin{equation} L(\vb *{x}_1, \ldots , \vb *{x}_N, \dot {\vb *{x}}_1, \ldots , \dot {\vb *{x}}_N) = L(\vb *{x}_1 + s \hat {\vb *{x}}, \ldots , \vb *{x}_N + s \hat {\vb *{x}}, \dot {\vb *{x}}_1, \ldots , \dot {\vb *{x}}_N), \end{equation}

which is the statement that space is homogeneous: translating the system in some direction \(\hat {\vb *{x}}\) leaves the equations of motion unchanged. These translations are elements of the Galilean group that we met in Chapter 1.2.

We call total momentum the vectorial quantity

\(\seteqnumber{0}{2.}{25}\)\begin{equation} \vb *{P} = \sum _{k=1}^N m_k \dot {\vb *{x}}_k = \sum _{k=1}^N \vb *{p}_k. \end{equation}

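As in Example 2.2, the vector field generating these translations is constant, \(X = (\hat {\vb *{x}}, \ldots , \hat {\vb *{x}})\), so Noether theorem implies that, for any \(\hat {\vb *{x}}\), the projection of the total momentum on the direction \(\hat {\vb *{x}}\), which is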

\(\seteqnumber{0}{2.}{26}\)\begin{equation} \langle \vb *{P}, \hat {\vb *{x}}\rangle = \sum _{k=1}^N \langle \vb *{p}_k, \hat {\vb *{x}}\rangle , \end{equation}

is a conserved quantity. Since \(\hat {\vb *{x}}\) is arbitrary, \(\vb *{P}\) itself is conserved.
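The conservation of \(\vb *{P}\) can be observed in a small simulation. The sketch below assumes hypothetical masses and a hypothetical pair potential \(u(r) = r^4/4 - r^2/2\) depending only on the distances \(r = |\vb *{x}_j - \vb *{x}_k|\), so that the lagrangian is invariant under the translations (2.24); it integrates the equations of motion with the velocity Verlet scheme. Since the pair forces come in equal and opposite couples, each velocity update leaves \(\vb *{P}\) unchanged, and \(\vb *{P}\) is preserved up to rounding errors.

```python
import random

random.seed(0)
masses = [1.0, 2.0, 0.5]  # hypothetical masses
N = len(masses)


def forces(xs):
    # pair potential u(r) = r^4/4 - r^2/2: the force on j due to k is -(r^2 - 1)(x_j - x_k)
    F = [[0.0, 0.0, 0.0] for _ in range(N)]
    for j in range(N):
        for k in range(j + 1, N):
            d = [a - b for a, b in zip(xs[j], xs[k])]
            coef = -(sum(e * e for e in d) - 1.0)
            for c in range(3):
                F[j][c] += coef * d[c]
                F[k][c] -= coef * d[c]  # equal and opposite (Newton's third law)
    return F


def kick(vs, F, dt):
    return [[v + dt * f / m for v, f in zip(vi, Fi)] for vi, Fi, m in zip(vs, F, masses)]


def verlet(xs, vs, dt, n):
    for _ in range(n):
        vs = kick(vs, forces(xs), 0.5 * dt)
        xs = [[x + dt * v for x, v in zip(xi, vi)] for xi, vi in zip(xs, vs)]
        vs = kick(vs, forces(xs), 0.5 * dt)
    return xs, vs


def total_momentum(vs):
    return [sum(m * v[c] for m, v in zip(masses, vs)) for c in range(3)]


xs0 = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(N)]
vs0 = [[random.uniform(-0.5, 0.5) for _ in range(3)] for _ in range(N)]
xs1, vs1 = verlet(xs0, vs0, 1e-3, 2000)
P0, P1 = total_momentum(vs0), total_momentum(vs1)
net_force = [sum(F[c] for F in forces(xs1)) for c in range(3)]
print(P0, P1, net_force)
```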

This should be intuitively clear: one point in space is much the same as any other. So why would a system of particles speed up to get somewhere else, when its current position is just as good? This manifests itself as conservation of momentum.

This fact can also be interpreted as follows: the sum of all forces acting on the point particles of a closed system vanishes, \(\sum _k \vb *{F}_k = 0\), in agreement with Newton’s third law. You have already figured this out by solving Exercise 1.1.

5 It may seem that we forgot to push \(\dot x\) forward by \(\Phi _s\); however, since \(\Phi _s\) is a translation, its pushforward is \((\Phi _s)_* = \Id \), which acts on \(\dot x\) as the identity.

Example 2.3 (The barycenter). Consider the galilean transformation \(t \mapsto t\), \(\vb *{x}_k = \vb *{x}'_k + \vb *{v}t\), so that \(\dot {\vb *{x}}_k = \dot {\vb *{x}}'_k + \vb *{v}\). The total momentum \(\vb *{P}\) transforms as

\(\seteqnumber{0}{2.}{27}\)\begin{equation} \vb *{P} = \vb *{P}' + \vb *{v} \sum _{k=1}^N m_k. \end{equation}

If the total momentum is zero with respect to some inertial reference frame (see Section 1.2.1), we say that the system is stationary in that reference frame. For a closed system, it is always possible to find an inertial frame of reference, called the co-moving frame, in which the system is stationary: by (2.28), it is the frame moving with velocity

\(\seteqnumber{0}{2.}{28}\)\begin{equation} \vb *{v_T} = \frac {\vb *{P}}{\sum _{k=1}^N m_k} = \frac {\sum _{k=1}^N m_k \dot {\vb *{x}}_k}{\sum _{k=1}^N m_k}, \end{equation}

which can be thought of as the total velocity of the mechanical system. Rewriting the formula above gives6

\(\seteqnumber{0}{2.}{29}\)\begin{equation} \vb *{P} = M \vb *{v_T}, \quad M:= \sum _{k=1}^N m_k. \end{equation}

In other words, the total momentum of the system is the product of its total mass and its total velocity. In view of this, we can interpret the total velocity of the system, seen as a whole, as the velocity of a virtual point particle with position

\(\seteqnumber{0}{2.}{30}\)\begin{equation} \vb *{X} = \frac {\sum _{k=1}^N m_k \vb *{x}_k}{M}. \end{equation}

This special point is called the barycenter (or center of mass) of the system.

Defining the internal energy \(E_i\) of a system as its total energy in the co-moving frame, the energy of the system with respect to another inertial reference frame is given by

\(\seteqnumber{0}{2.}{31}\)\begin{equation} E = E_i + \frac {M |\vb *{v_T}|^2}{2}, \quad M= \sum _{k=1}^N m_k. \end{equation}
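Formula (2.32) can be spot-checked on random data. Assuming, as for a closed system, that the potential depends only on relative positions, it takes the same value in both frames, so the check reduces to the kinetic energy. The masses and velocities below are hypothetical random values; the sketch computes \(\vb *{v_T}\) from (2.29), the velocities \(\dot {\vb *{x}}'_k = \dot {\vb *{x}}_k - \vb *{v_T}\) in the co-moving frame, and compares both sides of (2.32).

```python
import random

random.seed(1)
masses = [random.uniform(0.5, 2.0) for _ in range(4)]                  # hypothetical data
vels = [[random.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(4)]


def kinetic(ms, vs):
    return sum(0.5 * m * sum(c * c for c in v) for m, v in zip(ms, vs))


M = sum(masses)
P = [sum(m * v[c] for m, v in zip(masses, vels)) for c in range(3)]
v_T = [Pc / M for Pc in P]                                   # eq. (2.29)
vels_cm = [[v[c] - v_T[c] for c in range(3)] for v in vels]  # co-moving frame
P_cm = [sum(m * v[c] for m, v in zip(masses, vels_cm)) for c in range(3)]

T_total = kinetic(masses, vels)
T_internal = kinetic(masses, vels_cm)
T_barycenter = 0.5 * M * sum(c * c for c in v_T)
print(T_total, T_internal + T_barycenter, P_cm)
```

The cross term \(\sum _k m_k \langle \dot {\vb *{x}}'_k, \vb *{v_T}\rangle \) vanishes precisely because the total momentum in the co-moving frame is zero, which is why the decomposition works.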

Exercise 2.3. Consider, as in Example 1.7 and Exercise 1.2, a closed system of point particles with charge \(e\) in the presence of a uniform constant magnetic field \(\vb *{B}\). Define

\(\seteqnumber{0}{2.}{32}\)\begin{equation} \widetilde {\vb *{P}} := \vb *{P} + \frac {e}c \vb *{B}\wedge \vb *{X}, \end{equation}

where \(\vb *{P} = \sum _k m_k \dot {\vb *{x}}_k\) is the total momentum as defined for \(\vb *{B} = 0\), and \(\vb *{X} = \sum _k \vb *{x}_k\) is the sum of the particle positions (you can think of it as a kind of barycenter). Show that for such a system \(\widetilde {\vb *{P}}\) is a conserved quantity.

2.1.2 Isotropy of space: angular momentum

The isotropy of space is the statement that a closed system is invariant under rotations about any axis through a fixed point. To work out the corresponding conserved quantities, we can work directly with the infinitesimal form of the rotations.

Let’s first look at the invariants related to isotropy. Rotations about an axis through the origin of \(\mathbb {R}^3\) can be identified with orthogonal transformations \(A\in SO(3)\), where the special orthogonal group \(SO(3)\) consists of the orthogonal matrices (\(A^TA = \Id \), with \(\Id \) the identity matrix) with determinant \(1\).

For future reference, it is worth recalling the structure of these transformations in more generality. We will do it briefly; for more details on the special orthogonal groups and their relation to rotations and vector products, you can refer to [MR99].

Consider, for some small \(\epsilon > 0\), a family of orthogonal matrices around the identity

\(\seteqnumber{0}{2.}{33}\)\begin{equation} \label {eq:familyson} A(s) \in SO(n), \quad A(0) = \Id , \quad |s| < \epsilon . \end{equation}

If we look at \(SO(n)\) as the smooth manifold in the space \(M^{n\times n}\) of \(n\times n\) matrices defined by the equations

\(\seteqnumber{0}{2.}{34}\)\begin{equation} A^T A = \Id ,\quad \det A = 1, \end{equation}

then its tangent space at the identity is

\(\seteqnumber{0}{2.}{35}\)\begin{equation} T_{\Id } SO(n) = \big \{X\in M^{n\times n}\mid X^T = -X\big \}, \end{equation}

the space of antisymmetric \(n\times n\) matrices.

This is a consequence of the following proposition.

Proposition 2.3. Let \(A(s)\) be a one–parameter family of orthogonal matrices of the form (2.34). Then,

\(\seteqnumber{0}{2.}{36}\)\begin{equation} \label {eq:form} A(s) = \Id + sX + O(s^2), \quad A^T(s)A(s) = \Id \end{equation}

and the matrix \(X\) is antisymmetric (\(X^T = - X\)). Moreover, the converse relation also holds: given an antisymmetric matrix \(X\), there is a one–parameter family of orthogonal matrices \(A(s)\) of the form (2.37).

Proof. Expanding the smooth family of matrices for small \(s\), with \(X := A'(0)\), immediately gives the local form (2.37). The orthogonality condition \(A^TA = \Id \) then implies

\(\seteqnumber{0}{2.}{37}\)\begin{align} \Id & = A^T(s) A(s) \\ & = \left (\Id + sX + O(s^2)\right )^T\left (\Id + sX + O(s^2)\right ) \\ & = \Id + s(X^T + X) + O(s^2), \end{align} which can hold only if \(X^T + X =0\).

Conversely, given \(X\) antisymmetric, the one–parameter family

\(\seteqnumber{0}{2.}{40}\)\begin{equation} A(s) := e^{sX} = \Id + s X + \frac {s^2}{2!} X^2 + \cdots \end{equation}

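is orthogonal for all \(s\), since \((e^{sX})^T = e^{sX^T} = e^{-sX}\) and \(-sX\) commutes with \(sX\):

\(\seteqnumber{0}{2.}{41}\)\begin{equation} A^T(s)A(s) = e^{sX^T}e^{sX} = e^{-sX} e^{sX} = \Id . \end{equation}

Moreover, \(\det A(s) = e^{s\,\mathrm {tr}\, X} = 1\), because an antisymmetric matrix has zero trace. □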
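A minimal numerical check of the converse part of Proposition 2.3, assuming a hypothetical antisymmetric \(3\times 3\) matrix `X`: the sketch computes \(e^{sX}\) by summing the truncated power series (adequate here because \(sX\) has moderate norm; it is not a robust general-purpose matrix exponential), verifies \(A^TA = \Id \) and \(\det A = 1\), and checks that \((A(s) - \Id )/s\) approaches \(X\) for small \(s\), as in (2.37).

```python
def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(row) for row in zip(*A)]


def expm_series(A, terms=40):
    # e^A = sum_k A^k / k!, truncated; fine for matrices of moderate norm
    n = len(A)
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms):
        term = [[x / k for x in row] for row in matmul(term, A)]
        result = [[r + t for r, t in zip(rr, tr)] for rr, tr in zip(result, term)]
    return result


def det3(A):
    return (A[0][0] * (A[1][1] * A[2][2] - A[1][2] * A[2][1])
            - A[0][1] * (A[1][0] * A[2][2] - A[1][2] * A[2][0])
            + A[0][2] * (A[1][0] * A[2][1] - A[1][1] * A[2][0]))


def scale(c, A):
    return [[c * x for x in row] for row in A]


X = [[0.0, -0.3, 0.8], [0.3, 0.0, -0.5], [-0.8, 0.5, 0.0]]  # hypothetical, X^T = -X
A = expm_series(scale(1.5, X))
AtA = matmul(transpose(A), A)
orth_err = max(abs(AtA[i][j] - (1.0 if i == j else 0.0)) for i in range(3) for j in range(3))

s = 1e-6
A_small = expm_series(scale(s, X))
deriv_err = max(abs((A_small[i][j] - (1.0 if i == j else 0.0)) / s - X[i][j])
                for i in range(3) for j in range(3))
print(orth_err, det3(A), deriv_err)
```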

Exercise 2.4 (Euler theorem). Given an antisymmetric matrix

\(\seteqnumber{0}{2.}{42}\)\begin{equation} X = (X_{ij})_{1\leq i,j\leq 3}, \end{equation}

show that, for sufficiently small \(s\in \mathbb {R}\) and modulo higher order corrections, the transformation

\(\seteqnumber{0}{2.}{43}\)\begin{equation} \vb *{x} \mapsto \vb *{x} + s X \vb *{x} + O(s^2) \end{equation}

is the rotation by the angle \(\delta \phi = s|\vb *{N}|\) around the axis through the origin parallel to \(\vb *{N} = (X_{32}, X_{13}, X_{21})\). For a hint, see [Arn89, Chapter 26.D].
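The correspondence behind Exercise 2.4, \(X\vb *{x} = \vb *{N}\wedge \vb *{x}\) with \(\vb *{N} = (X_{32}, X_{13}, X_{21})\), can be spot-checked numerically. In this sketch the entries of `X` and the vectors `x`, `p` are hypothetical random data; it also checks that the axis is fixed by the infinitesimal rotation, \(X\vb *{N} = 0\), and the identity \(\langle \vb *{p}, X\vb *{x}\rangle = \langle \vb *{N}, \vb *{x}\wedge \vb *{p}\rangle \) used in (2.45).

```python
import random


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def matvec(X, x):
    return tuple(dot(row, x) for row in X)


def axis_of(X):
    # N = (X_32, X_13, X_21) in the 1-based notation of the text
    return (X[2][1], X[0][2], X[1][0])


random.seed(2)
a, b, c = (random.uniform(-1.0, 1.0) for _ in range(3))
X = [[0.0, -c, b], [c, 0.0, -a], [-b, a, 0.0]]  # generic antisymmetric matrix
N = axis_of(X)
x = tuple(random.uniform(-1.0, 1.0) for _ in range(3))
p = tuple(random.uniform(-1.0, 1.0) for _ in range(3))

print(matvec(X, x), cross(N, x))                  # X x versus N ^ x
print(matvec(X, N))                               # the axis is fixed: X N = 0
print(dot(p, matvec(X, x)), dot(N, cross(x, p)))  # <p, X x> = <N, x ^ p>
```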

In view of Exercise 2.4, for a single particle whose lagrangian is invariant under rotations, Noether theorem gives the conserved quantity

\(\seteqnumber{0}{2.}{44}\)\begin{equation} I = \langle \vb *{p}, X\vb *{x}\rangle = \langle \vb *{p}, \vb *{N}\wedge \vb *{x}\rangle = \langle \vb *{N}, \vb *{x}\wedge \vb *{p} \rangle , \end{equation}

where for the second equality we used the relation between \(3\times 3\) skew-symmetric matrices and vector products, and for the last one the cyclic symmetry of the mixed product \(\langle \vb *{a}, \vb *{b}\wedge \vb *{c}\rangle \). The quantity

\(\seteqnumber{0}{2.}{45}\)\begin{equation} \vb *{M} = \vb *{x} \wedge \vb *{p} \end{equation}

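is called angular momentum.

Theorem 2.4. If the lagrangian (1.59) of a system of \(N\) point particles is invariant under all simultaneous rotations of the particles about the origin, then the components of the total angular momentum

\(\seteqnumber{0}{2.}{46}\)\begin{equation} \vb *{M} = \sum _{k=1}^N \vb *{x}_k \wedge \vb *{p}_k \end{equation}

are conserved quantities.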

Proof. On the configuration space \(\mathbb {R}^{3N}\) we consider the simultaneous rotation of all the point particles

\(\seteqnumber{0}{2.}{47}\)\begin{equation} (\vb *{x}_1,\ldots ,\vb *{x}_N) \mapsto (\vb *{x}_1,\ldots ,\vb *{x}_N) + s (X\vb *{x}_1,\ldots ,X\vb *{x}_N) + O(s^2). \end{equation}

This is associated to the vector field \((\vb *{x}_1,\ldots ,\vb *{x}_N) \mapsto (X\vb *{x}_1,\ldots ,X\vb *{x}_N)\) and, by Noether theorem, to the first integral

\(\seteqnumber{0}{2.}{48}\)\begin{equation} I_X = \sum _{k=1}^N \langle \vb *{p}_k, X\vb *{x}_k\rangle . \end{equation}

As mentioned above, an antisymmetric matrix \(X\) acts as the vector product with a vector, which we denote again by \(\vb *{X}\) (the vector \(\vb *{N}\) of Exercise 2.4), and therefore

\(\seteqnumber{0}{2.}{49}\)\begin{align} I_X & = \sum _{k=1}^N \langle \vb *{p}_k, \vb *{X}\wedge \vb *{x}_k\rangle \\ & = \sum _{k=1}^N \langle \vb *{X}, \vb *{x}_k\wedge \vb *{p}_k\rangle \\ & = \langle \vb *{X}, \sum _{k=1}^N \vb *{x}_k\wedge \vb *{p}_k\rangle \\ & = \langle \vb *{X},\vb *{M}\rangle . \end{align}

The claim follows from the arbitrariness of \(\vb *{X}\). □
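As for the total momentum, the conservation of \(\vb *{M}\) can be observed numerically. The sketch below assumes hypothetical masses and a hypothetical softened attractive pair potential \(u(r) = -1/\sqrt {r^2 + \varepsilon ^2}\), which depends only on the distances and is therefore invariant under simultaneous rotations. It uses the velocity Verlet scheme: its velocity updates add forces parallel to \(\vb *{x}_j - \vb *{x}_k\) in equal and opposite pairs, and its position updates move each particle along its own velocity, so both steps leave \(\sum _k \vb *{x}_k\wedge \vb *{p}_k\) unchanged and \(\vb *{M}\) is preserved up to rounding errors.

```python
import random

random.seed(3)
masses = [1.0, 0.7, 1.5, 0.4]  # hypothetical masses
N = len(masses)
EPS2 = 0.25                    # hypothetical softening parameter epsilon^2


def forces(xs):
    # u(r) = -1/sqrt(r^2 + eps^2): force on j due to k is -(x_j - x_k) / (r^2 + eps^2)^(3/2)
    F = [[0.0, 0.0, 0.0] for _ in range(N)]
    for j in range(N):
        for k in range(j + 1, N):
            d = [a - b for a, b in zip(xs[j], xs[k])]
            coef = -1.0 / (sum(e * e for e in d) + EPS2) ** 1.5
            for i in range(3):
                F[j][i] += coef * d[i]
                F[k][i] -= coef * d[i]
    return F


def kick(vs, F, dt):
    return [[v + dt * f / m for v, f in zip(vi, Fi)] for vi, Fi, m in zip(vs, F, masses)]


def verlet(xs, vs, dt, n):
    for _ in range(n):
        vs = kick(vs, forces(xs), 0.5 * dt)
        xs = [[x + dt * v for x, v in zip(xi, vi)] for xi, vi in zip(xs, vs)]
        vs = kick(vs, forces(xs), 0.5 * dt)
    return xs, vs


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]


def angular_momentum(xs, vs):
    # M = sum_k x_k ^ p_k with p_k = m_k v_k
    total = [0.0, 0.0, 0.0]
    for m, x, v in zip(masses, xs, vs):
        total = [t + c for t, c in zip(total, cross(x, [m * vi for vi in v]))]
    return total


xs0 = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(N)]
vs0 = [[random.uniform(-0.5, 0.5) for _ in range(3)] for _ in range(N)]
xs1, vs1 = verlet(xs0, vs0, 1e-3, 3000)
M0, M1 = angular_momentum(xs0, vs0), angular_momentum(xs1, vs1)
print(M0, M1)
```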

Example 2.4. Consider a natural lagrangian (1.59) with an axial symmetry, i.e., whose potential is invariant under simultaneous rotations of all the particles around a fixed axis, which for convenience we take to be the \(z\) axis. Looking back at the proof above, we can infer that the conserved quantity will not be the full total angular momentum \(\vb *{M} = (M_x, M_y, M_z)\) but only its \(z\) component:

\(\seteqnumber{0}{2.}{53}\)\begin{equation} M_z = \sum _{k=1}^N m_k\left (x_k \dot y_k - y_k \dot x_k\right ) = \sum _{k=1}^N m_k r_k^2 \dot \phi _k. \end{equation}

In the last expression we used the cylindrical coordinates \((r, \phi , z)\) to emphasize that the angular momentum is the analogue of the kinetic momentum but involving an angular velocity.

Note also that \(M_z\) depends quadratically on the distance \(r_k\) from the rotation axis. For a single particle, its conservation means that as the particle moves closer to the symmetry axis, its angular velocity \(\dot \phi \) must increase.
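The two expressions in (2.54) can be compared directly. In this sketch, which uses hypothetical values, a particle is given cylindrical coordinates \((r, \phi )\) and velocities \((\dot r, \dot \phi )\), its Cartesian velocity is computed by the chain rule, and \(m(x\dot y - y\dot x)\) is compared with \(m r^2\dot \phi \); the last lines show that, at fixed \(M_z\), halving \(r\) multiplies \(\dot \phi = M_z/(m r^2)\) by four.

```python
import math


def mz_cartesian(m, r, phi, rdot, phidot):
    # x = r cos(phi), y = r sin(phi); velocities by the chain rule
    x, y = r * math.cos(phi), r * math.sin(phi)
    xdot = rdot * math.cos(phi) - r * phidot * math.sin(phi)
    ydot = rdot * math.sin(phi) + r * phidot * math.cos(phi)
    return m * (x * ydot - y * xdot)


def mz_cylindrical(m, r, phidot):
    return m * r ** 2 * phidot


m, r, phi, rdot, phidot = 2.0, 1.5, 0.4, -0.3, 0.8  # hypothetical values
Mz = mz_cartesian(m, r, phi, rdot, phidot)
print(Mz, mz_cylindrical(m, r, phidot))

# at fixed M_z, the angular velocity is M_z / (m r^2)
phidot_far = Mz / (m * r ** 2)
phidot_near = Mz / (m * (r / 2) ** 2)
print(phidot_near / phidot_far)
```

Note that the radial velocity \(\dot r\) drops out of \(M_z\), as the computation in cylindrical coordinates predicts.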

2.1.3 Homogeneity of time: the energy

We discussed homogeneity and isotropy of space, and discovered that they are related to the conservation of different types of momentum. One could now ask: what about homogeneity of time?

Homogeneity of time means that \(L\) is invariant with respect to the transformation \(t\mapsto t+s\), which in turn means \(\frac {\partial L}{\partial t} = 0\). We already saw in Section 1.3.2 that for natural lagrangians this corresponds to conservation of energy! In other words, Time is to Energy as Space is to Momentum.

If you have taken a course on special relativity, you have probably seen that the combination of energy and \(3\)-momentum forms a \(4\)-vector which rotates under space-time transformations. You don’t have to be Einstein to make the connection: the link between energy-momentum and time-space exists also in Newtonian mechanics.

2.1.4 Scale invariance: Kepler’s third law

We have mentioned in Remark 1.4 that the two lagrangians \(L\) and \(\alpha L\), \(\alpha \neq 0\), give rise to the same equations of motion. This does not look like a symmetry in our sense, but it is worth investigating its meaning in light of what we have seen so far.

Theorem 2.5. Let \(L\) be a natural lagrangian \(L=T-U\) as in (1.59) whose potential is a homogeneous function of degree \(k\):

\(\seteqnumber{0}{2.}{54}\)\begin{equation} U(\lambda \vb *{x}_1, \ldots , \lambda \vb *{x}_N) = \lambda ^k U(\vb *{x}_1, \ldots , \vb *{x}_N) \qquad \forall \lambda >0. \end{equation}

Then the equations of motion are invariant with respect to the scaling transformation

\(\seteqnumber{0}{2.}{55}\)\begin{equation} t \mapsto \lambda ^{1-\frac {k}2} t, \qquad \vb *{x}_j \mapsto \lambda \vb *{x}_j \quad j=1,\ldots ,N. \end{equation}

Proof. The transformation

\(\seteqnumber{0}{2.}{56}\)\begin{equation} t \mapsto \mu t, \qquad \vb *{x}_j \mapsto \lambda \vb *{x}_j \quad j=1,\ldots ,N, \end{equation}

rescales the velocities as

\(\seteqnumber{0}{2.}{57}\)\begin{equation} \dv {t} \vb *{x}_j \mapsto \frac {\lambda }{\mu } \dv {t}\vb *{x}_j. \end{equation}

Therefore

\(\seteqnumber{0}{2.}{58}\)\begin{equation} T \mapsto \frac {\lambda ^2}{\mu ^2} T, \qquad U \mapsto \lambda ^k U. \end{equation}

For \(\mu = \lambda ^{1-\frac {k}2}\) we have

\(\seteqnumber{0}{2.}{59}\)\begin{equation} L \mapsto \lambda ^k T - \lambda ^k U = \lambda ^k L \end{equation}

and thus, by Remark 1.4, the two lagrangians have the same equations of motion. □

Example 2.5 (Harmonic oscillator). The potential of the harmonic oscillator

\(\seteqnumber{0}{2.}{60}\)\begin{equation} L = \frac {\dot x^2}2 - \frac {\omega ^2 x^2}2 \end{equation}

is homogeneous of degree \(2\). In this case \(1-\frac k2 = 0\), so time is not rescaled: the period of oscillation does not depend on the amplitude of the oscillation, as we had already seen in a previous example.

Example 2.6 (Kepler’s third law). Recall the lagrangian of Kepler’s problem from Examples 1.2 and 1.6:

\(\seteqnumber{0}{2.}{61}\)\begin{equation} L = \frac {m \dot {\vb *{x}}^2}{2} + \frac {\alpha }{\|\vb *{x}\|}, \quad \alpha >0. \end{equation}

In this case, the potential is homogeneous of degree \(-1\). Therefore \(1-k/2 = 3/2\) and, using \(\lambda ^2\) in place of \(\lambda \) in Theorem 2.5, the equations of motion are invariant with respect to the transformations

\(\seteqnumber{0}{2.}{62}\)\begin{equation} \vb *{x} \mapsto \lambda ^2 \vb *{x}, \qquad t \mapsto \lambda ^3 t. \end{equation}

In particular, an orbit rescaled by a factor \(\lambda ^2\) is traversed in a time rescaled by \(\lambda ^3\). This should remind you of Kepler’s third law: the square of the orbital period of a planet is directly proportional to the cube of the semi–major axis of its orbit, in symbols \(\frac {T^2}{a^3} = \mathrm {const}\).
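The scaling (2.63) can be tested numerically. The sketch below assumes hypothetical units \(m = \alpha = 1\) and a hypothetical initial condition on an eccentric bound orbit. It integrates Kepler’s equations with RK4 up to a time \(T\), then starts again from the rescaled initial data \(\vb *{x} \mapsto \lambda ^2\vb *{x}\), \(\dot {\vb *{x}} \mapsto (\lambda ^2/\lambda ^3)\dot {\vb *{x}} = \dot {\vb *{x}}/\lambda \) and integrates up to \(\lambda ^3 T\): the final position is \(\lambda ^2\) times the original one. Using the standard relation \(E = -\alpha /(2a)\) between the energy and the semi-major axis of a bound Kepler orbit, it also checks that \(a \mapsto \lambda ^2 a\).

```python
import math

alpha, m = 1.0, 1.0  # hypothetical units


def rhs(state):
    # m xddot = -alpha x / |x|^3, written as a first-order system
    x, y, vx, vy = state
    r3 = (x * x + y * y) ** 1.5
    return (vx, vy, -alpha * x / (m * r3), -alpha * y / (m * r3))


def rk4(state, dt, n):
    for _ in range(n):
        k1 = rhs(state)
        k2 = rhs(tuple(s + 0.5 * dt * k for s, k in zip(state, k1)))
        k3 = rhs(tuple(s + 0.5 * dt * k for s, k in zip(state, k2)))
        k4 = rhs(tuple(s + dt * k for s, k in zip(state, k3)))
        state = tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                      for s, a, b, c, d in zip(state, k1, k2, k3, k4))
    return state


def semi_major_axis(state):
    # bound orbits: E = m |v|^2 / 2 - alpha / |x| = -alpha / (2 a)
    x, y, vx, vy = state
    E = 0.5 * m * (vx * vx + vy * vy) - alpha / math.hypot(x, y)
    return -alpha / (2 * E)


lam, T, n = 1.3, 3.0, 20000
start = (1.0, 0.0, 0.0, 1.2)  # (x, y, xdot, ydot) on an eccentric bound orbit
scaled_start = (lam**2 * start[0], lam**2 * start[1], start[2] / lam, start[3] / lam)

end = rk4(start, T / n, n)                         # state at time T
scaled_end = rk4(scaled_start, lam**3 * T / n, n)  # state at time lam^3 T
print(scaled_end[:2], [lam**2 * c for c in end[:2]])
print(semi_major_axis(scaled_start) / semi_major_axis(start), lam**2)
```

Both runs use the same number of steps, so the comparison isolates the symmetry of the equations from the discretization error; a run with a different step count would agree only up to that error.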

2.2 The spherical pendulum

Let’s come back to the spherical pendulum, introduced in Example 1.13. Assume it moves under a constant gravitational acceleration \(g\) pointing downwards. It’s an exercise in trigonometry to see that, in spherical coordinates with \(\theta \) measured from the downward vertical, the lagrangian takes the form

\(\seteqnumber{0}{2.}{63}\)\begin{equation} L = \frac m2 l^2 \left (\dot \theta ^2 + \dot \phi ^2 \sin ^2\theta \right ) + mgl \cos \theta . \end{equation}

We can immediately see that \(\phi \) is a cyclic coordinate; therefore the corresponding kinetic momentum

\(\seteqnumber{0}{2.}{64}\)\begin{equation} \label {eq:spI} I = \frac {\partial L}{\partial \dot \phi } = m l^2 \dot \phi \sin ^2 \theta \end{equation}

is a constant of motion. With our choice of coordinates, \(I\) is the vertical component of the angular momentum with respect to the suspension point. We can now use (2.65) to replace every occurrence of \(\dot \phi \) with

\(\seteqnumber{0}{2.}{65}\)\begin{equation} \dot \phi = \frac {I}{ml^2 \sin ^2\theta }. \end{equation}

Computing the remaining equation of motion for \(\theta \), we get

\(\seteqnumber{0}{2.}{66}\)\begin{equation} ml^2 \ddot \theta = ml^2 \dot \phi ^2\sin \theta \cos \theta - mgl \sin \theta = -ml^2\frac {\partial V_{\mathrm {eff}}}{\partial \theta } \end{equation}

where

\(\seteqnumber{0}{2.}{67}\)\begin{equation} V_{\mathrm {eff}} = -\frac {g}l\cos \theta + \frac {I^2}{2m^2l^4}\frac 1{\sin ^2\theta }. \end{equation}

We are left with one degree of freedom, \(\theta \), which we can integrate by quadrature using the energy. Indeed, dividing the total energy by \(ml^2\), the conservation of energy translates into

\(\seteqnumber{0}{2.}{68}\)\begin{equation} E = \frac {\dot \theta ^2}2 + V_{\mathrm {eff}}(\theta ), \end{equation}

where \(E>\min _\theta V_{\mathrm {eff}}(\theta )\) is a constant, and therefore, on any time interval where \(\dot \theta > 0\),

\(\seteqnumber{0}{2.}{69}\)\begin{equation} \label {eq:sptheta} t - t_0 = \frac 1{\sqrt 2}\int \frac {\dd \theta }{\sqrt {E - V_{\mathrm {eff}}(\theta )}} \end{equation}

Thanks to (2.65), we can use (2.70) to solve for \(\phi \):

\(\seteqnumber{0}{2.}{70}\)\begin{equation} \phi = \int \frac {I}{ml^2} \frac 1{\sin ^2\theta } \;\dd t = \frac {I}{\sqrt {2} m l^2} \int \frac 1{\sin ^2\theta } \frac {1}{\sqrt {E - V_{\mathrm {eff}}(\theta )}}\; \dd \theta \end{equation}

Close to a minimum of the effective potential \(V_{\mathrm {eff}}\), the motion in \(\theta \) is a bounded oscillation, while \(\dot \phi \), whose sign is that of \(I\), never changes sign: the pendulum keeps turning around the vertical axis in the same direction, with a varying angular velocity.

If \(\theta _0\) is a minimum of the effective potential and the energy is tuned to \(E = V_{\mathrm {eff}}(\theta _0)\), a value fixed by the angular momentum \(I\), the pendulum moves on a stable circular orbit \(\theta \equiv \theta _0\). Looking at small oscillations around this point as we did for the simple pendulum, one can prove that small oscillations about \(\theta = \theta _0\) have angular frequency \(\omega \) with \(\omega ^2 = \frac {\partial ^2 V_{\mathrm {eff}}}{\partial \theta ^2}(\theta _0)\).
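The frequency of small oscillations about \(\theta _0\) can be checked numerically. The sketch below assumes the hypothetical values \(g/l = 1\) and \(c := I/(ml^2) = 0.5\), so that \(V_{\mathrm {eff}} = -\cos \theta + c^2/(2\sin ^2\theta )\). It locates \(\theta _0\) by bisection on \(V'_{\mathrm {eff}} = 0\) in \((0, \pi /2)\), where \(V'_{\mathrm {eff}}\) is increasing and changes sign (for \(\theta \ge \pi /2\) it is positive), evaluates \(\omega ^2 = V''_{\mathrm {eff}}(\theta _0)\) analytically, and compares \(2\pi /\omega \) with the period measured on a small-amplitude solution of \(\ddot \theta = -V'_{\mathrm {eff}}(\theta )\) obtained with RK4.

```python
import math

g_over_l = 1.0  # hypothetical value of g/l
c = 0.5         # hypothetical value of I/(m l^2)


def dV(th):
    # derivative of V_eff(theta) = -(g/l) cos(theta) + c^2 / (2 sin^2(theta))
    return g_over_l * math.sin(th) - c * c * math.cos(th) / math.sin(th) ** 3


def d2V(th):
    s, co = math.sin(th), math.cos(th)
    return g_over_l * co + c * c * (s * s + 3 * co * co) / s ** 4


# bisection for dV(theta_0) = 0 on (0, pi/2), where dV is increasing
lo, hi = 1e-3, math.pi / 2
for _ in range(100):
    mid = 0.5 * (lo + hi)
    if dV(mid) < 0:
        lo = mid
    else:
        hi = mid
theta0 = 0.5 * (lo + hi)
omega = math.sqrt(d2V(theta0))


def rk4_step(th, w, dt):
    # one RK4 step for theta'' = -dV(theta), written as (theta, w = theta')
    k1 = (w, -dV(th))
    k2 = (w + 0.5 * dt * k1[1], -dV(th + 0.5 * dt * k1[0]))
    k3 = (w + 0.5 * dt * k2[1], -dV(th + 0.5 * dt * k2[0]))
    k4 = (w + dt * k3[1], -dV(th + dt * k3[0]))
    return (th + dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]),
            w + dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]))


# start slightly off the minimum and record two upward crossings of theta = theta0
th, w, t, dt = theta0 + 1e-3, 0.0, 0.0, 1e-3
crossings = []
while len(crossings) < 2:
    th_new, w_new = rk4_step(th, w, dt)
    if th < theta0 <= th_new:
        crossings.append(t + dt * (theta0 - th) / (th_new - th))  # linear interpolation
    th, w, t = th_new, w_new, t + dt
measured_period = crossings[1] - crossings[0]
print(theta0, omega, 2 * math.pi / omega, measured_period)
```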

As you can see, the existence of two integrals of motion allowed us to essentially solve the equations of motion and to describe many details of the behavior of the system.

This is an instance of a far more general mechanism, which we will see at work again for Kepler’s problem and for motion in central potentials and which, once we introduce hamiltonian systems, will be at the core of integrable systems and perturbation theory.

2.3 Intermezzo: small oscillations

We have already seen a few cases in which, by considering small perturbations near a stable equilibrium, we could describe, with various degrees of accuracy, the behavior of a mechanical system in terms of the motion of a harmonic oscillator.

In this section we will see that this phenomenon is much more general (and useful): the motion of a system close to a stable equilibrium is described by a number of decoupled simple harmonic oscillators, each ringing at its own frequency.

Let’s revisit what we saw in Sections 1.3.1 and 1.3.3 in slightly more generality and in a more organic way. Let’s consider the Newton equation for a closed system with one degree of freedom in the presence of conservative forces, i.e., an equation like (1.72):

\(\seteqnumber{0}{2.}{71}\)\begin{equation} \ddot x = F(x). \end{equation}

As we saw in Example 1.10, it makes sense to ask what the motion looks like around an equilibrium point \(x^*\), i.e., a point such that \(F(x^*) = 0\). To analyze this in more detail, we linearize the equation. For a motion starting close to \(x^*\), we write

\(\seteqnumber{0}{2.}{72}\)\begin{equation} x(t) = x^* + \gamma (t), \end{equation}

where \(\gamma \) is small so that we can expand \(F\) in Taylor series around \(x^*\), getting

\(\seteqnumber{0}{2.}{73}\)\begin{equation} \ddot \gamma = \frac {\dd F}{\dd x}(x^*)\gamma + O(\gamma ^2). \end{equation}

Neglecting the higher order terms, we can see that there are three possibilities.

• If \(\frac {\dd F}{\dd x}(x^*) < 0\), the system behaves like a spring (1.5) with \(\omega _0^2 = -\frac {\dd F}{\dd x}(x^*)\). The general solution (1.6) tells us that the system undergoes stable oscillations around \(x^*\) with frequency \(\omega _0\) (and period \(\tau _0 = 2\pi /\omega _0\)).

• If, on the other hand, \(\frac {\dd F}{\dd x}(x^*) > 0\), the force pushes the system away from the equilibrium: the solution is of the form \(\gamma (t) = C_+ e^{\lambda t} + C_- e^{-\lambda t}\), for some integration constants \(C_+\) and \(C_-\) and with \(\lambda > 0\), \(\lambda ^2 = \frac {\dd F}{\dd x}(x^*)\). Only the special initial conditions with \(C_+ = 0\) give \(x(t) \to x^*\) as \(t\) grows; for all the others, \(\gamma \) grows exponentially and, in a short time, leaves the region where the approximation is valid. In this case the equilibrium is said to be linearly unstable.

• Finally, there is the case \(\frac {\dd F}{\dd x}(x^*) = 0\), corresponding to a neutral equilibrium: the solution of the linearized equation is a uniform motion of the form \(\gamma (t) = C_0 + C_1 t\). We will come back to this case in a couple of sections.
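The three cases above can be summarized in a few lines of code. The sketch below is an illustration rather than a general tool: it classifies an equilibrium from the sign of \(\frac {\dd F}{\dd x}(x^*)\), supplied analytically, for the hypothetical forces \(F(x) = -\sin x\) (a pendulum with \(g/l = 1\), equilibria \(x^* = 0\) and \(x^* = \pi \)) and \(F(x) = -x^3\) at \(x^* = 0\). It then integrates the nonlinear equation \(\ddot x = -\sin x\) with RK4 from a small displacement and compares the result with the linearized solution \(\gamma (t) = \gamma (0)\cos (\omega _0 t)\).

```python
import math


def classify(dF, tol=1e-12):
    # linearized equation gamma'' = F'(x*) gamma, cf. (2.74)
    if dF < -tol:
        omega0 = math.sqrt(-dF)
        return "stable", omega0, 2 * math.pi / omega0   # frequency and period
    if dF > tol:
        return "linearly unstable", math.sqrt(dF), None  # growth rate lambda
    return "neutral", None, None


# F(x) = -sin(x) has F'(x) = -cos(x); F(x) = -x^3 has F'(0) = 0
cases = {
    "pendulum, x* = 0": classify(-math.cos(0.0)),
    "pendulum, x* = pi": classify(-math.cos(math.pi)),
    "cubic force, x* = 0": classify(0.0),
}
for name, result in cases.items():
    print(name, result)


def pendulum_rk4(x, v, dt, n):
    # integrates the nonlinear equation x'' = -sin(x)
    for _ in range(n):
        k1 = (v, -math.sin(x))
        k2 = (v + 0.5 * dt * k1[1], -math.sin(x + 0.5 * dt * k1[0]))
        k3 = (v + 0.5 * dt * k2[1], -math.sin(x + 0.5 * dt * k2[0]))
        k4 = (v + dt * k3[1], -math.sin(x + dt * k3[0]))
        x += dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        v += dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
    return x


amplitude, t_final = 0.01, 5.0
x_nonlinear = pendulum_rk4(amplitude, 0.0, 1e-3, 5000)
x_linear = amplitude * math.cos(1.0 * t_final)  # omega0 = 1 at x* = 0
print(x_nonlinear, x_linear)
```

The neutral case needs a tolerance because, in floating point, an exactly vanishing derivative can only be recognized when it is supplied analytically, as here.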

If we generalize the discussion above to \(n\) degrees of freedom, and we consider a natural lagrangian

\(\seteqnumber{0}{2.}{74}\)\begin{equation} L(q,\dot q) = \frac 12 g_{ij} \dot q^i \dot q^j - U(q^1, \ldots , q^n), \end{equation}

the equilibrium condition translates to the fact that \(q_* = (q_*^1, \ldots , q_*^n)\) must satisfy

\(\seteqnumber{0}{2.}{75}\)\begin{equation} \frac {\partial U}{\partial q^k} (q_*) = 0 \quad k=1,\ldots ,n. \end{equation}

To study its stability we now need to consider the hessian of the potential around \(q_*\):

\(\seteqnumber{0}{2.}{76}\)\begin{equation} h = (h_{ij}),\quad h_{ij} = \frac {\partial ^2 U}{\partial q^i\partial q^j} (q_*). \end{equation}

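Measuring \(q\) from the equilibrium (i.e., writing \(q\) for the displacement \(q - q_*\)), evaluating the metric \(g_{ij}\) at \(q_*\), and neglecting higher order terms in the Taylor expansion of the potential, the linearized system near the equilibrium point is given by the lagrangian [Arn89, Chapter 22.D]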

\(\seteqnumber{0}{2.}{77}\)\begin{equation} \label {eq:genlagso} L_0 = \frac 12 g_{ij} \dot q^i \dot q^j - \frac 12 h_{ij} q^i q^j. \end{equation}

Motions in a linearized system \(L_0\) near an equilibrium point \(q =q_*\) are called small oscillations. In a one-dimensional problem the numbers \(\tau _0\) and \(\omega _0\) are called period and frequency of the small oscillations.

Unfortunately the Euler-Lagrange equations look horrible compared to the one dimensional case... unless we find a way to simultaneously diagonalize \(g\) and \(h\).

As you may have guessed, linear algebra comes to the rescue: given two symmetric matrices \(h,g\), such that \(g\) is positive definite, there exists an invertible matrix \(\Gamma \) such that

\(\seteqnumber{0}{2.}{78}\)\begin{equation} \Gamma ^T g \Gamma = I, \quad \Gamma ^T h \Gamma = \diag (\lambda _1, \ldots , \lambda _n), \end{equation}

where \(\lambda _1, \ldots , \lambda _n\) are the roots of the characteristic equation [Arn89, Chapter 23.A]

\(\seteqnumber{0}{2.}{79}\)\begin{equation} \label {eq:charso} \det (h - \lambda g) = 0. \end{equation}

In the new coordinates \(\widetilde q\) defined by \(q = \Gamma \widetilde q\), the lagrangian \(L_0\) takes the form

\(\seteqnumber{0}{2.}{80}\)\begin{equation} L_0(\widetilde q, \dot {\widetilde q}) = \frac 12 \sum _{k=1}^n \left ((\dot {\widetilde q}^k)^2 - \lambda _k (\widetilde q^k)^2 \right ), \end{equation}

whose equations of motion read

\(\seteqnumber{0}{2.}{81}\)\begin{equation} \ddot {\widetilde q}^k = -\lambda _k \widetilde q^k. \end{equation}

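In other words, in the coordinates \(\widetilde q\) the linearized system splits into \(n\) independent one-dimensional systems of the type studied above.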

In view of the observations above, the equilibrium \(\widetilde q = 0\), i.e., \(q = q_*\), is stable if and only if all the eigenvalues \(\lambda _1, \ldots , \lambda _n\) are positive:

\(\seteqnumber{0}{2.}{82}\)\begin{equation} \lambda _i = \omega _i^2, \quad \omega _i > 0. \end{equation}

The periodic motions corresponding to positive eigenvalues \(\lambda _i\) are called characteristic oscillations (or principal oscillations, or normal modes) of the system (2.78), and the corresponding number \(\omega _i\) is called its characteristic frequency.

In fact, by construction of \(\Gamma \), the coordinate axes of \(\widetilde q\) are pairwise orthogonal with respect to the scalar product \((g\vb *{u}, \vb *{v})_{\mathbb {R}^n}\) defined by the kinetic energy, and therefore the result above can be restated as follows.

-

Theorem 2.7. The system (2.78) has \(n\) characteristic oscillations, the directions of which are pairwise orthogonal with respect to the scalar product given by the kinetic energy.

This immediately implies that every small oscillation of (2.78) is a sum of characteristic oscillations.

As Example 1.12 illustrates well, such a sum of characteristic oscillations is generally not periodic!

2.3.1 Decomposition into characteristic oscillations

In view of what we have seen, we can look for characteristic oscillations of the form \(q(t) = e^{i\omega t} \vb *{\xi }\). Substituting this ansatz into the Euler-Lagrange equations of \(L_0\),

\(\seteqnumber{0}{2.}{83}\)\begin{equation} \dv {t} (g \dot q) = -h q, \end{equation}

we find

\(\seteqnumber{0}{2.}{84}\)\begin{equation} (h - \omega ^2 g)\vb *{\xi } = 0. \end{equation}

Solving (2.80), we find the \(n\) eigenvalues \(\lambda _k = \omega _k^2\), \(k=1, \ldots , n\), to which we can associate \(n\) eigenvectors \(\vb *{\xi }_k\), \(k=1, \ldots , n\), mutually orthogonal with respect to the scalar product defined by \(g\).

If all the eigenvalues are positive, the general solution has the form

\(\seteqnumber{0}{2.}{85}\)\begin{equation} q(t) = \mathrm {Re} \sum _{k=1}^n C_k e^{i \omega _k t}\vb *{\xi }_k, \quad C_k \in \mathbb {C}, \end{equation}

even when some eigenvalues are repeated.

In conclusion, to decompose a motion into characteristic oscillations, it is enough to project the initial conditions (with respect to the scalar product defined by \(g\)) onto the characteristic directions, i.e., the vectors \(\vb *{\xi }_k\) solving \((h - \lambda _k g)\vb *{\xi }_k = 0\), and to solve the corresponding one-dimensional problems.
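The recipe above can be sketched in Python with NumPy. This is an illustration under stated assumptions, not the notes' own method: the function names `normal_modes` and `small_oscillation` are hypothetical, \(g\) is assumed symmetric positive definite and \(h\) symmetric, and the simultaneous diagonalization (2.79) is obtained through the Cholesky factorization \(g = CC^T\), which turns \(\det (h-\lambda g)=0\) into an ordinary symmetric eigenvalue problem. If SciPy is available, `scipy.linalg.eigh(h, g)` solves the same generalized problem directly.

```python
import numpy as np

def normal_modes(g, h):
    """Eigenvalues lam_k and matrix Gamma (columns xi_k) such that
    Gamma^T g Gamma = I and Gamma^T h Gamma = diag(lam), cf. (2.79)."""
    C = np.linalg.cholesky(g)          # lower triangular, g = C @ C.T
    C_inv = np.linalg.inv(C)
    A = C_inv @ h @ C_inv.T            # symmetric; its eigenvalues solve det(h - lam g) = 0
    lam, U = np.linalg.eigh(A)         # ascending eigenvalues, orthonormal U
    return lam, C_inv.T @ U            # Gamma = C^{-T} U

def small_oscillation(g, h, q0, v0, t):
    """Displacement q(t) for L_0 with q(0) = q0, q'(0) = v0, assuming all lam_k > 0."""
    lam, Gamma = normal_modes(g, h)
    if np.any(lam <= 0):
        raise ValueError("not a stable equilibrium: some lam_k <= 0")
    omega = np.sqrt(lam)
    a = Gamma.T @ g @ q0               # g-projections of q0 onto the xi_k
    b = Gamma.T @ g @ v0               # g-projections of the initial velocity
    q_tilde = a * np.cos(omega * t) + (b / omega) * np.sin(omega * t)
    return Gamma @ q_tilde             # back to q = Gamma q_tilde

# Usage: the linearized double pendulum of Section 2.3.2 with m = l = 1 and g/l = 1.
g = np.array([[2.0, 1.0], [1.0, 1.0]])
h = np.diag([2.0, 1.0])
lam, Gamma = normal_modes(g, h)
print(lam)                             # 2 - sqrt(2) and 2 + sqrt(2)
print(small_oscillation(g, h, np.array([0.1, 0.0]), np.zeros(2), 1.0))
```

Each modal coordinate \(\widetilde q^k\) evolves as an independent one-dimensional oscillator; the projections use \(\Gamma ^{-1} = \Gamma ^T g\), which follows from \(\Gamma ^T g\Gamma = I\).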

More on small oscillations and their applications, with plenty of examples, can be found in [Arn89, Chapters 23–25].

2.3.2 The double pendulum

Let’s revisit the double pendulum, already introduced in Example 1.11. For simplicity, let’s assume that the masses are the same, \(m = m_1 = m_2\), as well as the two lengths \(l = l_1 = l_2\). The full lagrangian then reads

\(\seteqnumber{0}{2.}{86}\)\begin{equation} L = m l^2 \dot x_1^2 + \frac m2 l^2 \dot x_2^2 + m l^2 \cos (x_1 -x_2)\dot x_1 \dot x_2 + 2ml g \cos x_1 + mlg\cos x_2. \end{equation}

One can check that the stable equilibrium point is \(x_1 = x_2 = 0\). Expanding the lagrangian to second order around this point, so that the equations of motion become linear, and dropping the (irrelevant) constant terms, we obtain:

\(\seteqnumber{0}{2.}{87}\)\begin{equation} L_0 = m l^2 \dot x_1^2 + \frac m2 l^2 \dot x_2^2 + m l^2 \dot x_1 \dot x_2 - ml g x_1^2 - \frac 12 mlg x_2^2. \end{equation}

The corresponding Euler-Lagrange equations are then given by

\(\seteqnumber{0}{2.}{88}\)\begin{align} & 2ml^2 \ddot x_1 + ml^2 \ddot x_2 = -2mgl x_1 \\ & ml^2 \ddot x_1 + ml^2 \ddot x_2 = -mgl x_2, \end{align} which in matrix form becomes

\(\seteqnumber{0}{2.}{90}\)\begin{equation} \begin{pmatrix}2&1\\1&1\end {pmatrix} \ddot {\vb *{x}} = - \frac gl \begin{pmatrix}2&0\\0&1\end {pmatrix} \vb *{x}. \end{equation}

Inverting the matrix on the left hand side, we end up with

\(\seteqnumber{0}{2.}{91}\)\begin{equation} \label {eq:doublep} \ddot {\vb *{x}} = - \frac gl \begin{pmatrix}2&-1\\-2&2\end {pmatrix} \vb *{x}. \end{equation}

Diagonalizing the matrix on the right hand side is equivalent to performing the transformation described in Section 2.3.1.

The matrix in (2.92) has eigenvalues \(\lambda _\mp = \omega _\mp ^2 = \frac {g}l \left (2\mp \sqrt {2}\right )\), the squares of the normal frequencies, with corresponding (non normalized) eigenvectors, the normal modes, \(\vb *{\xi }_\mp = \left (1, \pm \sqrt {2}\right )^T\). The general motion is then given by

\(\seteqnumber{0}{2.}{92}\)\begin{equation} \vb *{x}(t) = C_+ \cos (\omega _+ (t - t_+)) \vb *{\xi }_+ + C_- \cos (\omega _- (t - t_-)) \vb *{\xi }_-. \end{equation}

Note that the oscillation frequency for the mode in which the rods oscillate in the same direction, \(\vb *{\xi }_-\), is lower than the frequency of the mode in which they are out of phase, \(\vb *{\xi }_+\).
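The eigenvalues and normal modes of (2.92) are easy to confirm numerically. A minimal NumPy sketch, working in units where \(g/l = 1\) (an assumption made only to keep the numbers dimensionless):

```python
import numpy as np

# Matrix of (2.92) in units of g/l; it is not symmetric, so we use eig rather than eigh.
A = np.array([[2.0, -1.0],
              [-2.0, 2.0]])
lam, vecs = np.linalg.eig(A)
lam, vecs = lam.real, vecs.real      # the eigenvalues of A are real
order = np.argsort(lam)              # lambda_- first, then lambda_+
lam, vecs = lam[order], vecs[:, order]
vecs = vecs / vecs[0]                # rescale each column so its first entry is 1

print(lam)                           # 2 - sqrt(2), 2 + sqrt(2): about 0.586, 3.414
print(vecs[1])                       # second components: sqrt(2), -sqrt(2)
print(np.sqrt(lam[1] / lam[0]))      # omega_+ / omega_- = 1 + sqrt(2), about 2.414
```

The in-phase mode \(\vb *{\xi }_-\) is the slow one, and the out-of-phase mode is faster by the factor \(1+\sqrt 2\).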

2.3.3 The linear triatomic molecule

Let’s consider the example of a linear triatomic molecule (e.g., carbon dioxide \(\mathrm {CO}_2\)). This consists of a central atom of mass \(M\) flanked symmetrically by two identical atoms of mass \(m\). For simplicity, let’s assume that the atoms are aligned and that each atom moves only along the axis of the molecule.

If \(x_i\), \(i=1,2,3\), denote the positions of the atoms along this axis, the lagrangian takes the form

\(\seteqnumber{0}{2.}{93}\)\begin{equation} L = \frac {m\dot x_1^2}2 + \frac {M\dot x_2^2}2 + \frac {m\dot x_3^2}2 - V(x_1 - x_2) - V(x_2 - x_3). \end{equation}

The function \(V\) is, in general, a complicated interatomic potential, however this will not matter for us, as we are interested here in the oscillations around the equilibrium. Indeed, assume \(x_i = x_i^*\) is an equilibrium position. By symmetry, we have \(|x_1^*-x_2^*| = |x_2^* - x_3^*| = r_*\). Taylor expanding the potential around the equilibrium point and dropping the \(O\left (|r-r_*|^3\right )\) terms, we get

\(\seteqnumber{0}{2.}{94}\)\begin{equation} V(r) = V(r_*) + \frac {\partial V}{\partial r}\Big |_{r=r_*}(r-r_*) + \frac 12 \frac {\partial ^2 V}{\partial r^2}\Big |_{r=r_*}(r-r_*)^2. \end{equation}

The first term is a constant and can be ignored, while the second vanishes because \(r_*\) is an equilibrium. Calling \(k = \frac {\partial ^2 V}{\partial r^2}\big |_{r=r_*}\) and \(q_i = x_i - x_i^*\), we end up with the lagrangian

\(\seteqnumber{0}{2.}{95}\)\begin{equation} L = \frac {m \dot q_1^2}{2} + \frac {M \dot q_2^2}{2} + \frac {m\dot q_3^2}2 - \frac k2 \left ((q_1 - q_2)^2 + (q_2 - q_3)^2\right ), \end{equation}

where as usual we omitted the higher order corrections.

In the notation of (2.78), we are left with

\(\seteqnumber{0}{2.}{96}\)\begin{equation} g = \begin{pmatrix}m&0&0\\0&M&0\\0&0&m\end {pmatrix}, \quad h = k\begin{pmatrix}1&-1&0\\-1&2&-1\\0&-1&1\end {pmatrix}. \end{equation}

The normal modes and their frequencies are then obtained from the pairs of eigenvalues and eigenvectors of \(h - \omega ^2 g\). In this case, they can be easily computed as:

-

• \(\lambda _1 = 0\), \(\vb *{\xi }_1 = (1,1,1)^T\): this is not an oscillation, instead it corresponds to a translation of the molecule.

-

• \(\lambda _2 = \frac km\), \(\vb *{\xi }_2 = (1,0,-1)^T\): the external atoms oscillate out of phase with frequency \(\omega _2 = \sqrt {\frac km}\), while the central one remains stationary.

-

• \(\lambda _3 = \frac km \left (1+2 \frac mM\right )\), \(\vb *{\xi }_3 = \left (1,-2\frac mM,1\right )^T\). This oscillation is more involved: the external atoms oscillate in the same direction, while the central atom moves in the opposite direction.
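The three modes can be checked numerically. In this minimal NumPy sketch the helper `triatomic_modes` is hypothetical; since \(g\) is diagonal, the generalized problem \((h-\lambda g)\vb *{\xi } = 0\) is reduced to an ordinary symmetric one with \(g^{-1/2}\). The values \(m = 16\), \(M = 12\) (oxygen and carbon masses in atomic mass units) and \(k = 1\) are illustrative only.

```python
import numpy as np

def triatomic_modes(m, M, k):
    """Eigenvalues lam (ascending) and modes xi (columns) of h - lam g for (2.97)."""
    g = np.diag([m, M, m])
    h = k * np.array([[1.0, -1.0, 0.0],
                      [-1.0, 2.0, -1.0],
                      [0.0, -1.0, 1.0]])
    s = 1.0 / np.sqrt(np.diag(g))       # g is diagonal, so g^(-1/2) = diag(s)
    A = s[:, None] * h * s[None, :]     # symmetric matrix g^(-1/2) h g^(-1/2)
    lam, U = np.linalg.eigh(A)          # solves (A - lam I) u = 0
    xi = s[:, None] * U                 # xi = g^(-1/2) u solves (h - lam g) xi = 0
    return lam, xi

m, M, k = 16.0, 12.0, 1.0
lam, xi = triatomic_modes(m, M, k)
print(lam)                       # close to 0, k/m, (k/m)(1 + 2m/M)
print(xi / xi[0])                # columns rescaled so the first component is 1
print(np.sqrt(lam[2] / lam[1]))  # omega_3 / omega_2 = sqrt(1 + 2m/M)
```

The first column is the translation \((1,1,1)^T\), whose eigenvalue vanishes up to rounding.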

The corresponding small oscillations are given, in general, as the linear combinations of the normal modes above:

\(\seteqnumber{0}{2.}{97}\)\begin{equation} \vb *{x}(t) = \vb *{x}^* + (C_1 + \widetilde C_1 t) \vb *{\xi }_1 + C_2 \cos (\omega _2 (t-t_2)) \vb *{\xi }_2 + C_3 \cos (\omega _3 (t-t_3)) \vb *{\xi }_3. \end{equation}

2.3.4 Zero modes

Recall Noether’s theorem (Theorem 2.2): every continuous one-parameter group of symmetries \(\Phi _s = Q(s,q)\) is associated with an integral of motion \(I = p_i \frac {\partial Q^i}{\partial s}\big |_{s=0}\).

Assume that we have \(L\) such one-parameter groups \(\Phi ^l_s = Q_l(s,q)\), \(l=1,\ldots ,L\) (here \(L\) denotes their number, not the lagrangian). For small oscillations near an equilibrium \(q_*\), let’s write \(q^k = q^k_* + \vb *{\eta }^k\). Then, to first order, the \(L\) first integrals can be rewritten as

\(\seteqnumber{0}{2.}{98}\)\begin{equation} I_l = C_{li} \dot \eta ^i,\quad l=1,\ldots , L, \qquad \mbox {where}\qquad C_{li} = g_{ij} \frac {\partial Q^j_l}{\partial s}\Big |_{s=0}. \end{equation}

Therefore, we can define the (non normalized) normal modes

\(\seteqnumber{0}{2.}{99}\)\begin{equation} \vb *{\xi }_l = C_{li} \vb *{\eta }^i, \quad l=1,\ldots , L, \end{equation}

which satisfy

\(\seteqnumber{0}{2.}{100}\)\begin{equation} \ddot {\vb *{\xi }}_l = 0, \quad l=1,\ldots , L. \end{equation}

Thus, to each continuous symmetry of the system there is an associated zero mode of the small oscillations problem, i.e., a mode with \(\omega _l = 0\).

-

Remark 2.8. We have already seen an example of this in Section 2.3.3: the normal mode \(\vb *{\xi }_1=(1,1,1)^T\) of the triatomic molecule is the zero mode corresponding to the invariance of the system with respect to horizontal translations.

We will revisit small oscillations once we discuss perturbation theory in the context of Hamiltonian systems. Before introducing the necessary formalism and discussing integrability and perturbation theory, we will spend some time looking at an important family of examples in light of our newly acquired knowledge.

2.4 Motion in a central potential

-

Example 2.7 (The two-body problem). Let’s go back to Examples 1.2 and 1.6. Consider a closed system of two point particles with masses \(m_1\) and \(m_2\). We now know that, in an inertial frame of reference, their natural lagrangian must have the form

\(\seteqnumber{0}{2.}{101}\)\begin{equation} L = \frac {m_1 \|\dot {\vb *{x}}_1\|^2}{2} + \frac {m_2 \|\dot {\vb *{x}}_2\|^2}{2} - U(\|\vb *{x}_1 - \vb *{x}_2\|). \end{equation}

We have anticipated that such a system possesses many first integrals, so we can expect to use them to integrate the equations of motion, as in some previous examples. By conservation of the total momentum, the barycenter moves uniformly; placing the origin at the barycenter therefore still gives an inertial frame, in which

\(\seteqnumber{0}{2.}{102}\)\begin{equation} m_1 \vb *{x}_1 + m_2 \vb *{x}_2 = 0. \end{equation}

This allows us to express the coordinates in terms of a single vector:

\(\seteqnumber{0}{2.}{103}\)\begin{equation} \label {eq:barycoords} \vb *{x} := \vb *{x}_1 - \vb *{x}_2, \qquad \vb *{x}_1 = \frac {m_2}{m_1 + m_2} \vb *{x}, \qquad \vb *{x}_2 = -\frac {m_1}{m_1 + m_2} \vb *{x}. \end{equation}

We can then use (2.104) and rewrite the equations of motion

\(\seteqnumber{0}{2.}{104}\)\begin{equation} m_1 \ddot {\vb *{x}}_1 = -\frac {\partial U}{\partial \vb *{x}_1}, \qquad m_2 \ddot {\vb *{x}}_2 = -\frac {\partial U}{\partial \vb *{x}_2}, \end{equation}

into the single equation

\(\seteqnumber{0}{2.}{105}\)\begin{equation} m \ddot {\vb *{x}} = -\nabla U(\|\vb *{x}\|), \quad m = \frac {m_1m_2}{m_1+m_2}. \end{equation}

These are the equations of motion of a point particle in a central potential \(U(\|\vb *{x}\|)\), i.e., with lagrangian

\(\seteqnumber{0}{2.}{106}\)\begin{equation} \label {eq:centralpot} L = \frac {m \|\dot {\vb *{x}}\|^2}{2} - U(\|\vb *{x}\|). \end{equation}

After solving the equations of motion of this system with three degrees of freedom, one can recover the dynamics of the original two bodies, i.e., a system with six degrees of freedom, using (2.104).

In the remainder of this section, we will use the symmetries of systems of the form (2.107) to study their dynamics.

We know from Section 2.1.2 that angular momentum is a first integral of rotationally symmetric lagrangians. This means that the lagrangian (2.107) has three constants of motion, given by the three components of the angular momentum

\(\seteqnumber{0}{2.}{107}\)\begin{equation} \vb *{M} = \vb *{x}\wedge \vb *{p}. \end{equation}

For convenience, let’s rewrite the equations of motion for (2.107) as

\(\seteqnumber{0}{2.}{108}\)\begin{equation} m\ddot {\vb *{x}} = - U'(x)\frac {\vb *{x}}{x}, \quad x = \|\vb *{x}\|, \quad U'(x)=\frac {\dd U(x)}{\dd x}. \end{equation}

-

Theorem 2.9. The trajectories of (2.107) are planar.

-

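Proof. By construction, the constant vector \(\vb *{M}\) is orthogonal to both \(\vb *{x}\) and \(\vb *{p} = m \dot {\vb *{x}}\). Therefore, if \(\vb *{M}\neq 0\), the point moves in the plane through the origin orthogonal to \(\vb *{M}\),

\(\seteqnumber{0}{2.}{109}\)\begin{equation} \label {eq:planarCP} \left \{\mathbf {y} \;\mid \; \langle \vb *{M}, \mathbf {y}\rangle = 0 \right \}. \end{equation}

If \(\vb *{M} = 0\), then \(\vb *{x}\wedge \dot {\vb *{x}} = 0\), so the direction of \(\vb *{x}\) is constant and the motion takes place along a line through the origin, which is trivially planar.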

□

Introducing polar coordinates \((r,\phi )\) in the plane (2.110), our lagrangian transforms to

\(\seteqnumber{0}{2.}{110}\)\begin{equation} L = \frac {m}{2} \left (\dot r^2 + r^2 \dot \phi ^2\right ) - U(r), \end{equation}

i.e., a system with two degrees of freedom and a cyclic coordinate \(\phi \). If we choose the \(z\)-axis parallel to the angular momentum, the first integral corresponding to \(\phi \) is

\(\seteqnumber{0}{2.}{111}\)\begin{equation} \label {eq:cyclicphi} \frac {\partial L}{\partial \dot \phi } = m r^2 \dot \phi = M_z. \end{equation}

-

Exercise 2.6. Use the conservation law

\(\seteqnumber{0}{2.}{112}\)\begin{equation} m r^2 \dot \phi = \mathrm {const} \end{equation}

to prove Kepler’s second law: the area swept by the position vector of a point particle in a central potential in the time interval \([t, t+\delta t]\) does not depend on \(t\). This is a rephrasing of the better-known statement: a line segment joining a planet and the Sun sweeps out equal areas during equal intervals of time.

Hint: how do you compute the area of the sector?

-

Proof. It follows from the conservation of (2.112),

\(\seteqnumber{0}{2.}{113}\)\begin{equation} \label {eq:cyclicphi1} m r^2 \dot \phi = M = \mathrm {const}, \end{equation}

that the energy can be rewritten in the form

\(\seteqnumber{0}{2.}{114}\)\begin{equation} \label {eq:clawcentral} E = \frac m2 \left (\dot r^2 + r^2 \dot \phi ^2\right ) + U(r) = \frac {m \dot r^2}2 + \frac {M^2}{2m r^2} + U(r). \end{equation}

Therefore,

\(\seteqnumber{0}{2.}{115}\)\begin{equation} \dot r = \pm \sqrt {\frac 2m(E-U(r)) - \frac {M^2}{m^2 r^2}}, \end{equation}

which means that, on a time interval where \(\dot r > 0\),

\(\seteqnumber{0}{2.}{116}\)\begin{equation} \label {eq:timedep} \dd t = \frac {\dd r}{\sqrt {\frac 2m(E-U(r)) - \frac {M^2}{m^2 r^2}}}. \end{equation}

At the same time, (2.112) implies that

\(\seteqnumber{0}{2.}{117}\)\begin{equation} \dd \phi = \frac {M}{m r^2} \dd t, \end{equation}

and, therefore, we can determine \(\phi (r)\) from

\(\seteqnumber{0}{2.}{118}\)\begin{equation} \label {eq:deltaphi} \phi (r) - \phi _0 = \int _{r_0}^r \frac {\frac {M}{\rho ^2}}{\sqrt {2m(E-U(\rho )) - \frac {M^2}{\rho ^2}}}\;\dd \rho . \end{equation}

□

The final form of the energy (2.115) has a deep meaning: it coincides with the energy of a closed mechanical system with one degree of freedom under the influence of the effective potential

\(\seteqnumber{0}{2.}{119}\)\begin{equation} \label {eq:effpotcp} U_{\mathrm {eff}}(r) := U(r) + \frac {M^2}{2 m r^2}. \end{equation}

In other words, we reduced the description of the radial motion to a one-dimensional closed system with natural lagrangian

\(\seteqnumber{0}{2.}{120}\)\begin{equation} \label {eq:efflagcp} L = \frac {m \dot r^2}{2} - U_{\mathrm {eff}}(r). \end{equation}

Given two consecutive roots \(r_m < r_M\) of the equation \(U_{\mathrm {eff}}(r) = E\), with \(U_{\mathrm {eff}}(r) < E\) in between, a radial motion starting in \([r_m, r_M]\) stays in the potential well \(r_m \leq r \leq r_M\). In the plane, this means that the trajectory is confined to an annular region, as depicted in Figure 2.1.

-

Exercise 2.7. Setting \(r_0 = r_m\) and \(r = r_M\) in the integral (2.119), define the quantity, i.e., the angle swept by the position vector during one full radial oscillation,

\(\seteqnumber{0}{2.}{121}\)\begin{equation} \label {eq:Dektaphi} \Delta \phi = 2\int _{r_m}^{r_M} \frac {\frac {M}{\rho ^2}}{\sqrt {2m(E-U(\rho )) - \frac {M^2}{\rho ^2}}}\;\dd \rho . \end{equation}

Prove that the motion is periodic if and only if

\(\seteqnumber{0}{2.}{122}\)\begin{equation} \Delta \phi = 2\pi \frac mn, \quad m,n\in \mathbb {Z}. \end{equation}
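The angle \(\Delta \phi \) of (2.122) can be evaluated numerically, which is handy for experimenting with Exercises 2.7 and 2.12. The sketch below is illustrative, not from the notes: the names `delta_phi` and `turning_points` are hypothetical, and the closed-form turning points assume the family \(U(r) = -k/r + \epsilon /r^2\). The substitution \(\rho = c - d\cos s\) removes the inverse square-root singularities at \(r_m\) and \(r_M\), and the midpoint rule never evaluates the integrand at the endpoints.

```python
import math

def delta_phi(U, E, M, m, r_min, r_max, n=2000):
    """Angle (2.122) between consecutive turning points r_min < r_max.

    Substituting rho = c - d*cos(s), c = (r_min + r_max)/2, d = (r_max - r_min)/2,
    turns the endpoint singularities into a smooth integrand on [0, pi],
    which is then integrated with the midpoint rule.
    """
    c = 0.5 * (r_min + r_max)
    d = 0.5 * (r_max - r_min)
    step = math.pi / n
    total = 0.0
    for j in range(n):
        s = (j + 0.5) * step
        rho = c - d * math.cos(s)
        f = 2.0 * m * (E - U(rho)) - M**2 / rho**2   # radicand in (2.122)
        total += (M / rho**2) * d * math.sin(s) / math.sqrt(f)
    return 2.0 * total * step

def turning_points(k, eps, E, M, m):
    """Roots of U_eff(r) = E for U(r) = -k/r + eps/r**2, assuming
    -k**2 m / (2 (M**2 + 2 m eps)) < E < 0."""
    A = M**2 / (2.0 * m) + eps            # U_eff(r) = -k/r + A/r**2
    disc = math.sqrt(k**2 + 4.0 * E * A)  # from E r^2 + k r - A = 0
    return (k - disc) / (-2.0 * E), (k + disc) / (-2.0 * E)

# Usage (illustrative values k = m = M = 1, E = -0.3).
k, m, M, E = 1.0, 1.0, 1.0, -0.3
for eps in (0.0, 0.01):
    r_m, r_M = turning_points(k, eps, E, M, m)
    U = lambda r, eps=eps: -k / r + eps / r**2
    print(eps, delta_phi(U, E, M, m, r_m, r_M))   # eps = 0 gives 2*pi up to rounding
```

For the pure Kepler potential the result is \(2\pi \), consistent with closed orbits; a nonzero \(\epsilon \) shifts it away from \(2\pi \), which is the effect studied in Exercise 2.12.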

2.4.1 Kepler’s problem

Let’s go back to Example 2.7, and replace \(U\) with the Newtonian gravitational potential \(-k/\|\vb *{x}\|\) (see Examples 1.2 and 1.6). In this case, the effective potential (2.120) is

\(\seteqnumber{0}{2.}{123}\)\begin{align} U_{\mathrm {eff}} (r) = -\frac kr + \frac {M^2}{2 m r^2}, \quad & k = G m_1 m_2 > 0, \\ & m = \frac {m_1m_2}{m_1+m_2}, \quad M := M_z. \end{align} It is now an exercise in calculus to see what this potential looks like. For \(M \neq 0\), the effective potential has a vertical asymptote at \(r=0\), where it blows up to \(+\infty \), and the horizontal asymptote \(y=0\) for \(r\to +\infty \). Finally, it has a single critical point, at \(r = M^2/(km)\), which is a global minimum,

\(\seteqnumber{0}{2.}{125}\)\begin{equation} U_{\mathrm {eff}}^* := U_{\mathrm {eff}}\left (\frac {M^2}{k m}\right ) = - \frac {k^2m}{2M^2}, \end{equation}

after which the potential grows approaching \(0\) as \(r\to +\infty \).

In terms of the phase portrait, this means that, for \(M\neq 0\), the motion is bounded if and only if

\(\seteqnumber{0}{2.}{126}\)\begin{equation} - \frac {k^2 m}{2 M^2} \leq E < 0, \end{equation}

where the lower bound corresponds to a circular orbit of radius \(M^2/(km)\), and it is unbounded for \(E \geq 0\).

The shape of the trajectory is then given by (2.119), which is explicitly solvable in this case:

\(\seteqnumber{0}{2.}{127}\)\begin{equation} \phi = \arccos \left (\frac {\frac {M}{r}-\frac {km}{M}}{\sqrt {2mE + \frac {k^2m^2}{M^2}}}\right ) + C, \end{equation}

where the constant depends on the initial conditions. Choosing \(C=0\), we can rewrite the equation of the trajectory as

\(\seteqnumber{0}{2.}{128}\)\begin{equation} \label {eq:KeplerConic} r = \frac {p}{1+e\,\cos \phi }, \qquad p:=\frac {M^2}{km}, \quad e:=\sqrt {1+\frac {2EM^2}{k^2 m}}, \end{equation}

which is the equation of a conic section with a focus at the origin, focal parameter \(p\) and eccentricity \(e\), in agreement with Kepler’s first law. Choosing \(C=0\) means that we have chosen the angle \(\phi \) such that \(\phi =0\) corresponds to the perihelion of the trajectory, i.e., the point closest to the focus.

We can now confirm the last claim in Example 1.2. Indeed, from the classification of conic sections, (2.129) is an ellipse when \(E<0\), i.e., when \(0 \leq e<1\) (a circle for \(e=0\)). The semi-axes of the ellipse are then given by

\(\seteqnumber{0}{2.}{129}\)\begin{equation} a = \frac {p}{1-e^2}, \quad b = \frac {p}{\sqrt {1-e^2}}. \end{equation}

Furthermore, when \(E=0\), i.e., \(e = 1\), the trajectory is a parabola and, finally, for \(E>0\), i.e., \(e >1\), it is a hyperbola.
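The formulas (2.129) and (2.130) translate directly into a small helper that classifies the orbit from the first integrals. This is a minimal sketch; the name `orbit_elements` is hypothetical and \(M \neq 0\) is assumed.

```python
import math

def orbit_elements(E, M, k, m):
    """Focal parameter p, eccentricity e and conic type from (2.129), assuming M != 0.

    For E < 0 the semi-axes (2.130) are returned as well.
    """
    p = M**2 / (k * m)
    # max(...) guards against a tiny negative radicand from rounding near E = U_eff^*
    e = math.sqrt(max(0.0, 1.0 + 2.0 * E * M**2 / (k**2 * m)))
    if E < 0:
        return {"type": "ellipse", "p": p, "e": e,
                "a": p / (1.0 - e**2), "b": p / math.sqrt(1.0 - e**2)}
    return {"type": "parabola" if E == 0 else "hyperbola", "p": p, "e": e}

print(orbit_elements(-0.3, 1.0, 1.0, 1.0))   # ellipse, a = 1/0.6
print(orbit_elements(0.5, 1.0, 1.0, 1.0))    # hyperbola, e = sqrt(2)
```

Combining the formulas shows that the semi-major axis depends only on the energy, \(a = k/(2|E|)\), which the first usage line reproduces.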

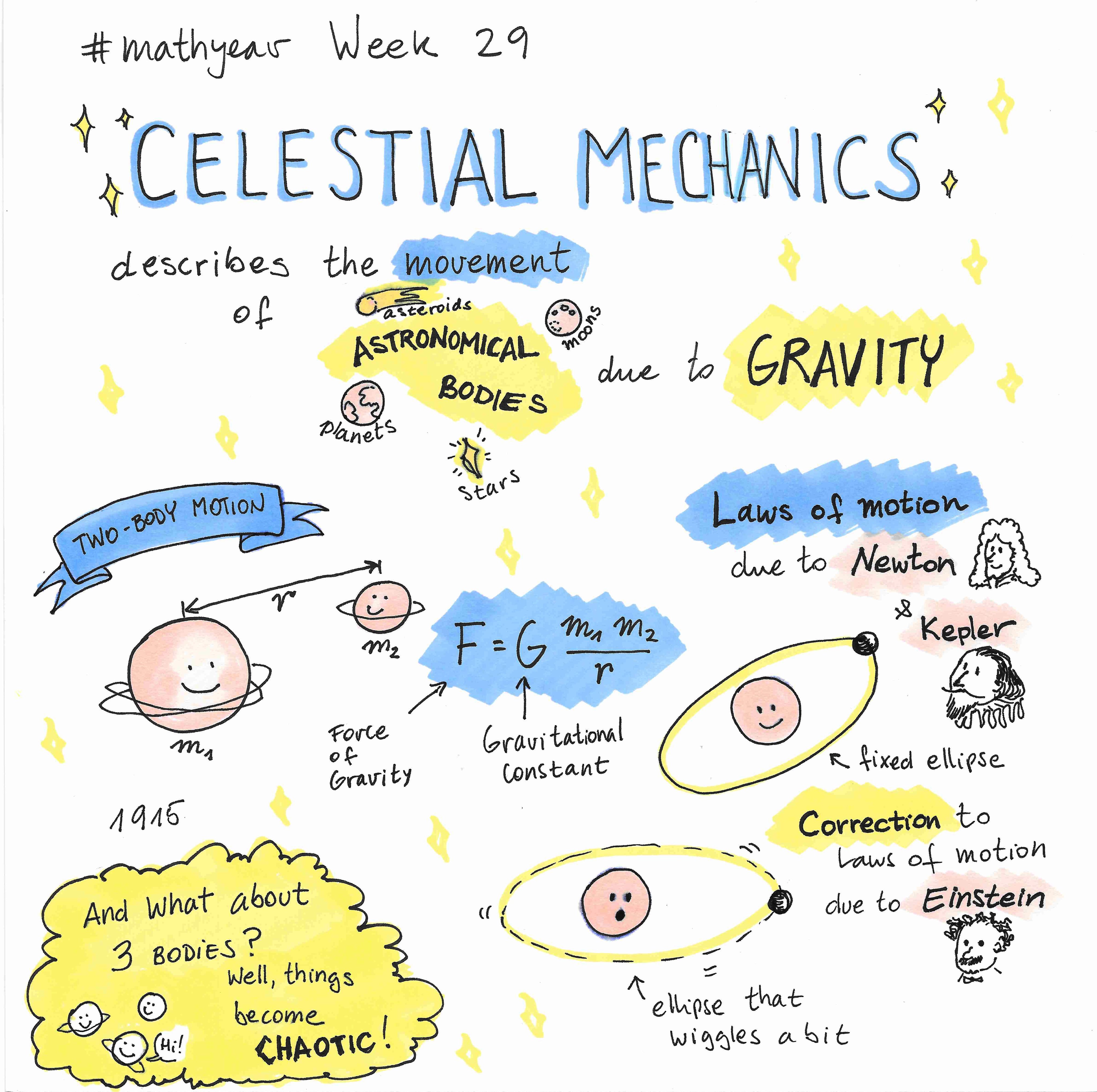

Figure 2.3: A graphical introduction to Celestial Mechanics, courtesy of Constanza Rojas-Molina. If you feel creative, consider contributing to the #mathyear challenge.

We can also look at the time one body spends along parts of the orbit by looking at (2.117):

\(\seteqnumber{0}{2.}{130}\)\begin{equation} t = \sqrt {\frac {m}{2}} \int \frac {\dd r}{\sqrt {E - U_{\mathrm {eff}}(r)}}, \quad U_{\mathrm {eff}}(r) = -\frac kr + \frac {M^2}{2 m r^2}. \end{equation}

In the case of elliptic trajectories, \(0<e<1\), this integral can be computed, despite its appearance, through a chain of substitutions. Using

\(\seteqnumber{0}{2.}{131}\)\begin{equation} M = \sqrt {kmp}, \quad E = -k \frac {1-e^2}{2p}, \quad \mbox {and}\quad p= a(1-e^2), \end{equation}

and then the substitution

\(\seteqnumber{0}{2.}{132}\)\begin{equation} \label {eq:rkp} r - a = - a e \cos \theta , \end{equation}

the integral becomes

\(\seteqnumber{0}{2.}{133}\)\begin{align} t & = \sqrt {\frac {ma}k} \int \frac {r\dd r}{\sqrt {-r^2 + 2ar -ap}} \\ & = \sqrt {\frac {ma}k} \int \frac {r\dd r}{\sqrt {a^2e^2 - (r-a)^2}} \\ & = \sqrt {\frac {ma^3}{k}} \int (1- e \cos \theta ) \dd \theta \\ & = \sqrt {\frac {ma^3}{k}} (\theta - e \sin \theta ) + C. \label {eq:tkp} \end{align} By the arbitrariness of the choice of \(t_0\), we can always choose \(C=0\).

Together, (2.133) and (2.137) provide a parametrization of the radial motion:

\(\seteqnumber{0}{2.}{137}\)\begin{equation} \left \lbrace \begin{aligned} r & = a(1-e\cos \theta ) \\ t & = \sqrt {\frac {ma^3}k}(\theta - e\sin \theta ) \end {aligned} \right .. \end{equation}

Since \(\theta \mapsto r(\theta )\) is \(2\pi \)-periodic, we can immediately read off the period from \(t(\theta )\), which gives Kepler’s third law:

\(\seteqnumber{0}{2.}{138}\)\begin{equation} T = 2\pi \sqrt {\frac {ma^3}k}. \end{equation}

To rewrite the orbits in cartesian coordinates, \(x = r \cos \phi \), \(y=r \sin \phi \), we can use the two representations for \(r\)

\(\seteqnumber{0}{2.}{139}\)\begin{equation} \frac {p}{1+e\cos \phi } = r = a(1-e\cos \theta ) \end{equation}

to derive

\(\seteqnumber{0}{2.}{140}\)\begin{equation} \cos \phi = -\frac {e - \cos \theta }{1-e \cos \theta },\quad \sin \phi = \frac {\sqrt {1-e^2}\;\sin \theta }{1-e\cos \theta }, \end{equation}

which, in turn, imply the parametrization in cartesian coordinates

\(\seteqnumber{0}{2.}{141}\)\begin{equation} \left \lbrace \begin{aligned} x & = a(\cos \theta - e) \\ y & = a\sqrt {1-e^2}\;\sin \theta \\ t & = \sqrt {\frac {ma^3}k}(\theta - e\sin \theta ) \end {aligned} \right .. \end{equation}
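The parametrization (2.142) gives \(t\) as a function of \(\theta \); to find the position at a given time one has to invert Kepler’s equation \(\theta - e\sin \theta = \sqrt {k/(ma^3)}\,t\). A common approach is Newton’s method, sketched below in Python. The function name `kepler_position` is hypothetical, \(t=0\) is taken at the perihelion (\(C=0\)), and the starting guess is a common heuristic rather than anything prescribed by the notes.

```python
import math

def kepler_position(t, a, e, k, m, tol=1e-14, max_iter=50):
    """Position (x, y) at time t on the ellipse (2.142), with t = 0 at perihelion.

    Solves Kepler's equation theta - e*sin(theta) = n_mean*t by Newton's method,
    where n_mean = sqrt(k/(m a^3)) = 2*pi/T.
    """
    n_mean = math.sqrt(k / (m * a**3))
    tau = math.fmod(n_mean * t, 2.0 * math.pi)
    theta = math.pi if e > 0.8 else tau    # heuristic starting guess
    for _ in range(max_iter):
        step = (theta - e * math.sin(theta) - tau) / (1.0 - e * math.cos(theta))
        theta -= step
        if abs(step) < tol:
            break
    x = a * (math.cos(theta) - e)
    y = a * math.sqrt(1.0 - e**2) * math.sin(theta)
    return x, y

a, e, k, m = 2.0, 0.3, 1.0, 1.0                 # illustrative values
T = 2 * math.pi * math.sqrt(m * a**3 / k)       # period (2.139)
for frac in (0.0, 0.25, 0.5):
    print(frac, kepler_position(frac * T, a, e, k, m))
```

At \(t = 0\) the body is at the perihelion \((a(1-e), 0)\) and at \(t = T/2\) at the aphelion \((-a(1+e), 0)\). Since \(1 - e\cos \theta \geq 1-e > 0\), the Newton step is always well defined.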

-

Exercise 2.8. Obtain the following parametrization for the hyperbolic motion for \(E>0\).

\(\seteqnumber{0}{2.}{142}\)\begin{equation} \left \lbrace \begin{aligned} x & = a(e- \cosh \xi ) \\ y & = a\sqrt {e^2-1}\;\sinh \xi \\ t & = \sqrt {\frac {ma^3}k}(e\sinh \xi -\xi ) \end {aligned} \right .. \end{equation}

-

Exercise 2.9. Obtain the following parametrization for the parabolic motion for \(E=0\).

\(\seteqnumber{0}{2.}{143}\)\begin{equation} \left \lbrace \begin{aligned} x & = \frac p2 \left (1- \eta ^2\right ) \\ y & = p\eta \\ t & = \sqrt {\frac {mp^3}k}\frac \eta 2\left (1+\frac {\eta ^2}3\right ) \end {aligned} \right .. \end{equation}

An explicit dependence on time can be obtained, with some extra effort, by expanding the trigonometric terms in series of Bessel functions. This is rather useful when studying certain types of orbit-orbit or spin-orbit resonances, but we will not investigate it further in this course. For additional information you can refer to [AKN06; Arn10].

-

Exercise 2.10. Let \(0<e<1\) and \(\tau = \theta - e\sin \theta \). Show that \(\sin \theta \) and \(\cos \theta \) can be expanded in Fourier series as

\(\seteqnumber{0}{2.}{144}\)\begin{align} \sin \theta & = \frac 2e \sum _{n=1}^\infty \frac {J_n(ne)}{n} \sin (n\tau ), \\ \cos \theta & = -\frac e2 + 2 \sum _{n=1}^\infty \frac {J'_n(ne)}{n} \cos (n\tau ), \end{align} where \(J_n\) are the Bessel functions of the first kind, and \(J'_n\) denotes the derivative of \(J_n\),

\(\seteqnumber{0}{2.}{146}\)\begin{equation} J_n(z) = \sum _{j=0}^\infty \frac {(-1)^j}{j!\,(j+n)!}\left (\frac z2\right )^{2j+n}, \quad n\in \mathbb {N}. \end{equation}

Hint: use the integral representation

\(\seteqnumber{0}{2.}{147}\)\begin{equation} J_n(z) = \frac 1\pi \int _0^\pi \cos \left (z\sin \phi - n \phi \right )\dd \phi , \quad n\in \mathbb {N}. \end{equation}
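Before proving the expansion, one can check it numerically. The sketch below (a check, not a proof) sums the power series (2.147) for \(J_n\), solves \(\tau = \theta - e\sin \theta \) by Newton’s method, and compares \(\sin \theta \) with a partial sum of the Fourier series. The helper names are hypothetical, and the truncation orders are chosen for moderate \(e\), such as the illustrative \(e = 0.3\).

```python
import math

def bessel_j(n, z, terms=40):
    """J_n(z) from the truncated power series (2.147); adequate for moderate |z|."""
    return sum((-1)**j / (math.factorial(j) * math.factorial(j + n)) * (z / 2) ** (2 * j + n)
               for j in range(terms))

def eccentric_anomaly(tau, e, tol=1e-15):
    """Solve tau = theta - e*sin(theta) for theta by Newton's method (0 < e < 1)."""
    theta = tau
    for _ in range(50):
        step = (theta - e * math.sin(theta) - tau) / (1.0 - e * math.cos(theta))
        theta -= step
        if abs(step) < tol:
            break
    return theta

def sin_theta_series(tau, e, n_max=30):
    """Partial sum of the Fourier series for sin(theta) in Exercise 2.10."""
    return (2.0 / e) * sum(bessel_j(n, n * e) / n * math.sin(n * tau)
                           for n in range(1, n_max + 1))

e, tau = 0.3, 1.1
print(math.sin(eccentric_anomaly(tau, e)), sin_theta_series(tau, e))  # should agree closely
```

For \(0<e<1\) the coefficients \(J_n(ne)/n\) decay geometrically in \(n\), so a few dozen terms already give agreement to many digits.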

In Example 1.2, we mentioned a third conserved quantity for the Kepler problem.

-

Exercise 2.12. Consider a small perturbation of the gravitational potential \(U(r) = -\frac kr\) of the form

\(\seteqnumber{0}{2.}{149}\)\begin{equation} U(r) \mapsto U(r) + \epsilon \frac 1{r^2}. \end{equation}

Use (2.122) to compute, to first order in \(\epsilon \), the shift \(\delta \phi \) of the perihelion of the elliptic orbit.

2.5 D’Alembert principle and systems with constraints

In most of the examples above, we have started by assuming that each particle is free to roam in \(\mathbb {R}^3\). However, in many instances this is not the case. In this section we will briefly investigate how Lagrangian mechanics deals with these cases. For a different take on this problem, refer to [Arn89, Chapter 21].

If a free point particle living on a surface \(M\) in \(\mathbb {R}^3\) were evolving according to the free euclidean Newton’s law (without any potential), it would generally move along a straight line and leave the surface. From Newton’s point of view, this means that there must be an additional force, the constraint (or reaction) force, that keeps the particle on the surface. Of course, constraints can be more general than this.

More generally, consider a natural lagrangian (1.59)

\(\seteqnumber{0}{2.}{150}\)\begin{equation} L(\vb *{x}, \dot {\vb *{x}}) = \sum _{k=1}^N \frac {m_k \|\dot {\vb *{x}}_k\|^2}{2} - U(\vb *{x}_1, \ldots , \vb *{x}_N) \end{equation}

whose point particles are forced to satisfy a number of holonomic constraints, i.e., relationships between the coordinates of the form

\(\seteqnumber{0}{2.}{151}\)\begin{equation} \label {eq:holonomic} f_1(\vb *{x}_1, \ldots ,\vb *{x}_N) = 0, \;\ldots ,\; f_l(\vb *{x}_1, \ldots ,\vb *{x}_N) = 0 \end{equation}

where the functions \(f_i : \mathbb {R}^{3N}\to \mathbb {R}\), \(i=1,\ldots ,l\), are smooth.

-

Remark 2.9. We call the constraints holonomic if the functions that define them do not depend on the velocities. They may depend on time, in which case their treatment is analogous to what we do in this section. More general constraints, which also involve the velocities, are called nonholonomic and will not be treated in this course.

Hamilton’s principle is then applied to the curves that satisfy the relations (2.152) at all times, with fixed end points (which, in particular, also satisfy (2.152)).

D’Alembert’s principle states that the evolution of a mechanical system with constraints can be seen as the evolution of a closed system in the presence of additional forces called constraint forces.

To be more precise, assume that the \(l\) equations (2.152) are independent, i.e., that the gradients of \(f_1, \ldots , f_l\) are linearly independent at every point where (2.152) holds. Then (2.152) defines a smooth submanifold \(Q\subset M\) of the configuration space \(M=\mathbb {R}^{3N}\) of maximal dimension \(\dim Q = 3N-l\). At each point \(q\in Q\), the tangent space \(T_qQ\) is a linear subspace of the ambient tangent space \(T_q \mathbb {R}^{3N}\), and its orthogonal complement is the \(l\)-dimensional linear subspace spanned by the gradients of \(f_1, \ldots , f_l\). For a brief but more comprehensive discussion of this you can look at [Ser20, Chapter 2.8].

-

Theorem 2.11. The equations of motion of a mechanical system of \(N\) particles with natural lagrangian (1.59) under the effect of \(l\) independent holonomic constraints (2.152) have the form

\(\seteqnumber{0}{2.}{152}\)\begin{equation} \label {eq:generalnewtonconstraint} m_k \ddot {\vb *{x}}_k = - \frac {\partial U}{\partial \vb *{x}_k} + \vb *{R}_k, \quad k=1, \ldots , N, \end{equation}

where the constraint force \(\vb *{R} = (\vb *{R}_1, \ldots , \vb *{R}_N)\) is orthogonal to the tangent space \(T_{\vb *{x}}Q\) of \(Q\) at \(\vb *{x}\).

-

Proof. To deal with constraints in the lagrangian formulation, we introduce \(l\) new variables \(\lambda _1, \ldots , \lambda _l\), called Lagrange multipliers (see footnote 7), and define a new lagrangian

\(\seteqnumber{0}{2.}{153}\)\begin{equation} \widetilde L(\vb *{x}, \dot {\vb *{x}}, \lambda ) = L(\vb *{x}, \dot {\vb *{x}}) + \sum _{j=1}^l \lambda _j f_j(\vb *{x}). \end{equation}

The evolution will be determined by the critical points for the corresponding action functional \(\widetilde S\).

Since \(\widetilde L\) does not depend on \(\dot \lambda \), the Euler-Lagrange equations for \(\lambda \) are just

\(\seteqnumber{0}{2.}{154}\)\begin{equation} \frac {\partial \widetilde L}{\partial \lambda _j} = f_j(\vb *{x}) = 0,\quad j=1,\ldots ,l, \end{equation}

which gives us back the constraints defining the submanifold \(Q\).

The other equations of motion, on the other hand, take the form

\(\seteqnumber{0}{2.}{155}\)\begin{equation} \label {eq:newtconstr} m_k \ddot {\vb *{x}}_k = -\frac {\partial U}{\partial \vb *{x}_k} + \sum _{j=1}^l \lambda _j \frac {\partial f_j(\vb *{x})}{\partial \vb *{x}_k}, \quad k=1,\ldots ,N. \end{equation}

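For each \(j\), the vector \(\left (\frac {\partial f_j(\vb *{x})}{\partial \vb *{x}_1}, \ldots , \frac {\partial f_j(\vb *{x})}{\partial \vb *{x}_N}\right )\) is orthogonal to the tangent space \(T_{\vb *{x}}Q\) (see footnote 8), and hence so is any linear combination of these vectors, in particular \(\vb *{R}\), where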

\(\seteqnumber{0}{2.}{156}\)\begin{equation} \vb *{R}_k := \sum _{j=1}^l \lambda _j \frac {\partial f_j(\vb *{x})}{\partial \vb *{x}_k}, \quad k=1,\ldots ,N. \end{equation}

□

7 For a brief introduction on their meaning, I recommend this twitter thread or this YouTube video.

8 Since \(f(\vb *{x}) = 0\), for any curve \(\vb *{x}:\mathbb {R}\to Q\) we have \(0 = \frac {d}{dt} f(\vb *{x}) = \langle \frac {\partial f(\vb *{x})}{\partial \vb *{x}}, \dot {\vb *{x}} \rangle \).

-

Remark 2.10. The theorem above is often stated as follows: in a constrained system, the total work of the constraint forces on any virtual variations, i.e., vectors \(\delta \vb *{x}\) tangent to the submanifold \(Q\), is zero. One can understand this by observing that for such tangent vectors

\(\seteqnumber{0}{2.}{157}\)\begin{equation} \label {eq:orthogonalconstraint} \sum _{k=1}^N \left \langle \vb *{R}_k, \delta \vb *{x}_k\right \rangle = 0. \end{equation}

Equations (2.153) and (2.158) fully determine the evolution of the constrained system and the constraint forces.
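As a concrete instance of (2.156), consider a planar pendulum: a particle of mass \(m\) constrained by \(f(x,y) = x^2+y^2-l^2 = 0\) in the potential \(U = mgy\) (\(y\) pointing up). Differentiating the constraint twice along the motion gives \(\lambda = m(gy - \dot x^2 - \dot y^2)/(2l^2)\). The sketch below, with the hypothetical helper `pendulum_constraint_force`, computes \(\vb *{R} = \lambda \nabla f\) and checks Remark 2.10 numerically: the constraint force does no work on a tangent vector, and its magnitude equals the familiar tension \(mg\cos \theta + m l\dot \theta ^2\).

```python
import math

def pendulum_constraint_force(x, y, vx, vy, m, g, l):
    """Constraint force R = lambda * grad f for f(x, y) = x**2 + y**2 - l**2.

    Assumes U = m*g*y and a state that already satisfies f = 0 and df/dt = 0.
    Requiring d^2 f / dt^2 = 0 along (2.156) gives
    lambda = m*(g*y - vx**2 - vy**2) / (2*l**2).
    """
    lam = m * (g * y - vx**2 - vy**2) / (2.0 * l**2)
    return 2.0 * lam * x, 2.0 * lam * y   # grad f = (2x, 2y)

# State at angle theta from the downward vertical, angular velocity w.
m, g, l, theta, w = 1.0, 9.81, 2.0, 0.7, 1.3
x, y = l * math.sin(theta), -l * math.cos(theta)
vx, vy = l * w * math.cos(theta), l * w * math.sin(theta)   # tangent to the circle
Rx, Ry = pendulum_constraint_force(x, y, vx, vy, m, g, l)
print(Rx * vx + Ry * vy)                                 # virtual work: zero up to rounding
print(math.hypot(Rx, Ry), m * g * math.cos(theta) + m * l * w**2)   # tension, two ways
```

At rest at the bottom, \((x,y) = (0,-l)\), the same formula gives \(\vb *{R} = (0, mg)\), which exactly balances gravity.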

Constrained motions also have a nice intrinsic description in terms of a mechanical system on \(TQ\). Indeed, introducing local coordinates \(q= (q^1, \ldots , q^n)\) on \(Q\), where \(n = 3N-l\), the substitution \(\vb *{x}_k = \vb *{x}_k(q)\), \(k=1,\ldots ,N\), immediately implies the following theorem.

-

Theorem 2.12. The evolution of a mechanical system of \(N\) particles with natural lagrangian (1.59) under the effect of \(l\) independent holonomic constraints (2.152) can be described by a mechanical system on \(TQ\) with lagrangian

\(\seteqnumber{0}{2.}{158}\)\begin{equation} L_Q(q,\dot q) = \frac 12 g_{ij}(q)\dot q^i \dot q^j - U(\vb *{x}_1(q),\ldots ,\vb *{x}_N(q)), \quad n = 3N-l, \end{equation}

where

\(\seteqnumber{0}{2.}{159}\)\begin{equation} g_{ij}(q) = \sum _{k=1}^N m_k\left \langle \frac {\partial \vb *{x}_k}{\partial q^i},\frac {\partial \vb *{x}_k}{\partial q^j}\right \rangle \end{equation}

is the riemannian metric induced on \(Q\) by the mass-weighted euclidean metric \(\dd s^2 = \sum _{j=1}^N m_j\langle \dd {\vb *{x}_j}, \dd {\vb *{x}_j}\rangle \).
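The induced metric of Theorem 2.12 can be computed numerically from any parametrization \(q\mapsto (\vb *{x}_1(q),\ldots ,\vb *{x}_N(q))\). In this NumPy sketch the function `induced_metric` is hypothetical and the Jacobian is approximated by central differences; it is checked on a spherical pendulum of mass \(m\) and length \(l\), for which \(g = ml^2\,\mathrm {diag}(1, \sin ^2\theta )\) in the angles \(q = (\theta ,\varphi )\).

```python
import numpy as np

def induced_metric(embed, q, masses, h=1e-6):
    """g_ij(q) = sum_k m_k <dx_k/dq^i, dx_k/dq^j>, as in Theorem 2.12.

    `embed(q)` returns an array of shape (N, 3) with the particle positions;
    the partial derivatives are approximated by central differences with step h.
    """
    q = np.asarray(q, dtype=float)
    n = q.size
    J = []                                    # J[i] = dX/dq^i, shape (N, 3)
    for i in range(n):
        dq = np.zeros(n)
        dq[i] = h
        J.append((embed(q + dq) - embed(q - dq)) / (2 * h))
    w = np.asarray(masses, dtype=float)[:, None]   # weights m_k, shape (N, 1)
    g = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            g[i, j] = np.sum(w * J[i] * J[j])
    return g

# Spherical pendulum: one particle at distance `length` from the pivot.
mass, length = 2.0, 1.5
def sphere(q):
    th, ph = q
    return np.array([[length * np.sin(th) * np.cos(ph),
                      length * np.sin(th) * np.sin(ph),
                      -length * np.cos(th)]])

print(induced_metric(sphere, [0.8, 0.3], [mass]))   # close to m l^2 diag(1, sin^2 0.8)
```

Together with the potential term, this metric gives the lagrangian \(L_Q\) of the constrained system in the chosen coordinates.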

This can be further generalized to mechanical systems constrained on the tangent bundle \(TM\) of a manifold \(M\) of dimension \(m\). In this case, let \(L=L(x,\dot x)\) be a nondegenerate lagrangian on \(TM\), and consider a smooth submanifold \(Q\subset M\) of dimension \(n\) with local coordinates \((q^1,\ldots ,q^n)\). Assume that \(Q\) is regular with respect to the lagrangian, i.e., that the restriction of the matrix \(\left (\frac {\partial ^2 L}{\partial \dot x^i \partial \dot x^j}\right )_{i,j=1,\ldots ,m}\) to the tangent spaces of \(Q\) is still nondegenerate:

\(\seteqnumber{0}{2.}{160}\)\begin{equation} \det \left (\frac {\partial ^2 L}{\partial \dot x^i \partial \dot x^j}\frac {\partial x^i}{\partial q^k}\frac {\partial x^j}{\partial q^l}\right )_{k,l=1,\ldots ,n} \neq 0. \end{equation}
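Regularity is a genuine restriction when the kinetic part of \(L\) is not positive definite. The Python sketch below assumes a hypothetical lagrangian \(L=\frac 12\big ((\dot x^1)^2-(\dot x^2)^2\big )\) on \(T\mathbb {R}^2\), which does not come from the notes. It computes the matrix in (2.161) as \(J^T H J\), where \(H\) is the Hessian of \(L\) in \(\dot x\) and \(J=\partial x/\partial q\), and then tests its determinant. The helper names `det` and `restricted_hessian` are our own. The line \(x=(q,q)\) fails to be regular, while the line \(x=(q,0)\) is regular.

```python
def det(a):
    """Determinant of a square matrix (list of rows) by Gaussian elimination."""
    a = [list(map(float, row)) for row in a]
    n = len(a)
    d = 1.0
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(a[r][c]))  # partial pivoting
        if a[p][c] == 0.0:
            return 0.0
        if p != c:
            a[c], a[p] = a[p], a[c]
            d = -d
        d *= a[c][c]
        for r in range(c + 1, n):
            factor = a[r][c] / a[c][c]
            for k in range(c, n):
                a[r][k] -= factor * a[c][k]
    return d


def restricted_hessian(H, J):
    """Matrix (2.161): sum_{i,j} H_ij (dx^i/dq^k) (dx^j/dq^l), i.e. J^T H J."""
    m, n = len(J), len(J[0])
    return [[sum(H[i][j] * J[i][k] * J[j][l] for i in range(m) for j in range(m))
             for l in range(n)] for k in range(n)]


# Hypothetical indefinite kinetic term L = ((x1')^2 - (x2')^2) / 2 on T R^2.
H = [[1.0, 0.0], [0.0, -1.0]]
J_null = [[1.0], [1.0]]   # Q: x = (q, q)
J_axis = [[1.0], [0.0]]   # Q: x = (q, 0)

print(det(H))                              # -1.0: L is nondegenerate on TM
print(det(restricted_hessian(H, J_null)))  # 0.0: this Q is not regular
print(det(restricted_hessian(H, J_axis)))  # 1.0: this Q is regular
```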

As before, we can define the constrained motion by extremizing the corresponding action restricted to the space of curves in \(Q\) with the appropriate fixed endpoints in \(Q\). We use the immersion of \(Q\) into \(M\), which in coordinates reads \(x^1 = x^1(q)\), \(\ldots \), \(x^m=x^m(q)\), together with the induced immersion of tangent bundles. With these we can define the restriction of the lagrangian \(L\) to the subbundle \(TQ\):

\(\seteqnumber{0}{2.}{161}\)\begin{equation} \label {eq:dalembertmanifold} L_Q(q,\dot q) = L\left (x(q), \frac {\partial x}{\partial q}\dot q\right ). \end{equation}

-

Exercise 2.13. Show that the equations of motion of the constrained motion on \(Q\) are given by the Euler-Lagrange equations of the lagrangian (2.162).

-

Example 2.8. Every submanifold \(Q\subset \mathbb {R}^m\) of the euclidean space is regular with respect to the free lagrangian

\(\seteqnumber{0}{2.}{162}\)\begin{equation} L = \frac 12 \sum _{k=1}^m \dot x^2_k. \end{equation}

The lagrangian \(L_Q\) of the constrained motion has the form

\(\seteqnumber{0}{2.}{163}\)\begin{equation} L_Q = \frac 12 \sum _{i,j=1}^n g_{ij}(q)\dot q^i\dot q^j, \qquad g_{ij}(q) = \sum _{k=1}^m \frac {\partial x_k}{\partial q^i}\frac {\partial x_k}{\partial q^j}. \end{equation}

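Here \(g_{ij}\) is the riemannian metric induced on \(Q\) by the euclidean metric \(\dd s^2 = \dd x_1^2 + \cdots + \dd x_m^2\). It is the Gram matrix of the vectors \(\partial x/\partial q^1,\ldots ,\partial x/\partial q^n\), which are linearly independent because \(x(q)\) is an immersion, so it is positive definite and in particular nondegenerate. This proves the regularity claimed above, since the Hessian of the free lagrangian is the identity. As we have already seen, the evolutions corresponding to \(L_Q\) are the geodesics of this metric.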

-

Exercise 2.14. Consider the lagrangian \(L=\frac 12 g_{ij}(q)\dot q^i \dot q^j\) on the tangent bundle of a riemannian manifold \(Q\) with metric \(\dd s^2 = g_{ij}(q)\dd q^i \dd q^j\). Show that the solutions \(q=q(t)\) of the Euler-Lagrange equations for \(L\) have constant speed, i.e., \(\langle \dot q, \dot q\rangle = g_{ij}(q)\dot q^i\dot q^j = \mathrm {const}\).
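The exercise asks for a proof. The following Python sketch only checks the statement numerically in one hypothetical case, the unit sphere with \(\dd s^2 = \dd \theta ^2 + \sin ^2\theta \,\dd \phi ^2\). It integrates the Euler-Lagrange equations \(\ddot \theta = \sin \theta \cos \theta \,\dot \phi ^2\), \(\ddot \phi = -2\cot \theta \,\dot \theta \dot \phi \) with a classical fourth-order Runge-Kutta scheme. The integrator, step size and initial data are our choices. The sketch records the largest deviation of \(\langle \dot q,\dot q\rangle \) from its initial value.

```python
import math


def rhs(s):
    """Euler-Lagrange equations of L = (th'^2 + sin(th)^2 ph'^2) / 2 as a first-order system."""
    th, ph, dth, dph = s
    ddth = math.sin(th) * math.cos(th) * dph ** 2
    ddph = -2.0 * math.cos(th) / math.sin(th) * dth * dph
    return (dth, dph, ddth, ddph)


def rk4_step(s, dt):
    """One step of the classical fourth-order Runge-Kutta method."""
    k1 = rhs(s)
    k2 = rhs(tuple(x + 0.5 * dt * k for x, k in zip(s, k1)))
    k3 = rhs(tuple(x + 0.5 * dt * k for x, k in zip(s, k2)))
    k4 = rhs(tuple(x + dt * k for x, k in zip(s, k3)))
    return tuple(x + dt / 6.0 * (a + 2 * b + 2 * c + d)
                 for x, a, b, c, d in zip(s, k1, k2, k3, k4))


def speed2(s):
    """<q', q'> in the metric ds^2 = dth^2 + sin(th)^2 dph^2."""
    th, _, dth, dph = s
    return dth ** 2 + math.sin(th) ** 2 * dph ** 2


s = (1.0, 0.0, 0.3, 0.8)  # (theta, phi, theta', phi'), away from the poles
v0 = speed2(s)
drift = 0.0
for _ in range(5000):      # integrate up to t = 5 with dt = 1e-3
    s = rk4_step(s, 1e-3)
    drift = max(drift, abs(speed2(s) - v0))
print(v0, drift)
```

The observed drift is at the level of the integration error. This is consistent with the exercise, but of course it is not a proof.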

-

Exercise 2.15 (Clairaut’s relation). Given a smooth function \(f: [a,b] \to \mathbb {R}_+\), consider the surface of revolution in \(\mathbb {R}^3\) implicitly defined by

\(\seteqnumber{0}{2.}{164}\)\begin{equation} \sqrt {x^2 + y^2} = f(z). \end{equation}

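Show that every geodesic on the surface has the following property: if \(\phi \) is the angle that the geodesic makes with the parallel \(z=\mathrm {const}\) through the current point, then \(f(z)\cos \phi = \mathrm {const}\) along the geodesic. Conversely, show that a curve with constant speed that satisfies this relation is a geodesic, provided no arc of it lies on a parallel.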
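In the same spirit, here is a numerical illustration of Clairaut's relation, again a check and not a proof. We parametrize the surface by \((\theta , z)\mapsto (f(z)\cos \theta , f(z)\sin \theta , z)\), so that \(\dd s^2 = f(z)^2\dd \theta ^2 + (1+f'(z)^2)\dd z^2\). We take the hypothetical profile \(f(z) = 1 + z^2/2\), defined for all \(z\) for simplicity. The angle with the parallel then satisfies \(\cos \phi = f\dot \theta /|\dot \gamma |\). The Python sketch integrates the Euler-Lagrange equations with a fourth-order Runge-Kutta scheme and monitors \(f(z)\cos \phi \). The profile, initial data and step size are our assumptions.

```python
import math


# Hypothetical profile f(z) = 1 + z^2/2 and its derivatives.
def f(z):
    return 1.0 + 0.5 * z * z


def df(z):
    return z


def d2f(z):
    return 1.0


def rhs(s):
    """Euler-Lagrange equations of L = (f^2 th'^2 + (1 + f'^2) z'^2) / 2.

    th is cyclic: d/dt (f^2 th') = 0 gives th'' = -2 f' z' th' / f;
    the z equation gives z'' = (f f' th'^2 - f' f'' z'^2) / (1 + f'^2).
    """
    th, z, dth, dz = s
    ddth = -2.0 * df(z) * dz * dth / f(z)
    ddz = (f(z) * df(z) * dth ** 2 - df(z) * d2f(z) * dz ** 2) / (1.0 + df(z) ** 2)
    return (dth, dz, ddth, ddz)


def rk4_step(s, dt):
    """One step of the classical fourth-order Runge-Kutta method."""
    k1 = rhs(s)
    k2 = rhs(tuple(x + 0.5 * dt * k for x, k in zip(s, k1)))
    k3 = rhs(tuple(x + 0.5 * dt * k for x, k in zip(s, k2)))
    k4 = rhs(tuple(x + dt * k for x, k in zip(s, k3)))
    return tuple(x + dt / 6.0 * (a + 2 * b + 2 * c + d)
                 for x, a, b, c, d in zip(s, k1, k2, k3, k4))


def clairaut(s):
    """f(z) cos(phi), with phi the angle between the velocity and the parallel."""
    th, z, dth, dz = s
    speed = math.sqrt(f(z) ** 2 * dth ** 2 + (1.0 + df(z) ** 2) * dz ** 2)
    cos_phi = f(z) * dth / speed  # <velocity, d/dth> / (|velocity| |d/dth|), |d/dth| = f
    return f(z) * cos_phi


s = (0.0, 0.5, 0.7, 0.4)  # (theta, z, theta', z')
c0 = clairaut(s)
drift = 0.0
for _ in range(5000):      # integrate up to t = 5 with dt = 1e-3
    s = rk4_step(s, 1e-3)
    drift = max(drift, abs(clairaut(s) - c0))
print(c0, drift)
```

Since \(\theta \) is a cyclic coordinate, the same run also conserves the kinetic momentum \(p_\theta = f^2\dot \theta \), in the sense of Example 2.1.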

-

Example 2.9 (Double pendulum revisited). Let’s see whether we can re-derive the double pendulum lagrangian using D’Alembert’s principle. We have a system of two point particles with masses \(m_1\) and \(m_2\) connected by massless rigid rods. One rod, of length \(l_2\), joins the two particles to each other; the other, of length \(l_1\), joins the first particle to a pivot on the ceiling. We assume that there is no friction and that the double pendulum is constrained to move in a single plane.

A priori, the positions of the two masses are represented by a six-dimensional vector \(\vb *{x} = (x_1, y_1, z_1, x_2, y_2, z_2)\in \mathbb {R}^6\), subject to the constraints

\(\seteqnumber{0}{2.}{165}\)\begin{align} f_1(\vb *{x}) & = y_1 = 0, \\ f_2(\vb *{x}) & = y_2 = 0, \\ f_3(\vb *{x}) & = x_1^2 + z_1^2 - l_1^2 = 0, \\ f_4(\vb *{x}) & = (x_2-x_1)^2 + (z_2-z_1)^2 - l_2^2 = 0, \end{align} expressing the planarity of the motion and the fixed lengths of the rods.

The number of degrees of freedom is then \(n = 6 -4 = 2\). The last two equations suggest that a good choice of coordinates is the pair of angles that the two rods make with the vertical through their respective upper joints, as we did in Example 1.11.
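Carrying out the substitution of Theorem 2.12 gives the kinetic metric in the angles. The Python sketch below does this under an assumed convention: the pivot is at the origin, the \(z\) axis points upward, and the angles \(\theta _1,\theta _2\) are measured from the downward vertical. This convention may differ in signs from Example 1.11. The sketch builds \(\vb *{x}_1(q),\vb *{x}_2(q)\) satisfying \(f_1=\cdots =f_4=0\) and evaluates (2.160) with exact partial derivatives. The result can then be compared with the closed form \(g_{11}=(m_1+m_2)l_1^2\), \(g_{12}=g_{21}=m_2l_1l_2\cos (\theta _1-\theta _2)\), \(g_{22}=m_2l_2^2\). The function names and the numerical values are hypothetical.

```python
import math


def positions(th1, th2, l1, l2):
    """Embedding q -> (x_1(q), x_2(q)) in R^3; solves f_1 = f_2 = f_3 = f_4 = 0.

    Assumed convention: pivot at the origin, z axis pointing up,
    angles measured from the downward vertical.
    """
    x1, z1 = l1 * math.sin(th1), -l1 * math.cos(th1)
    x2, z2 = x1 + l2 * math.sin(th2), z1 - l2 * math.cos(th2)
    return (x1, 0.0, z1), (x2, 0.0, z2)


def metric(th1, th2, m1, m2, l1, l2):
    """g_ij = sum_k m_k <dx_k/dth_i, dx_k/dth_j>, formula (2.160), with exact derivatives."""
    d1 = (l1 * math.cos(th1), 0.0, l1 * math.sin(th1))  # dx_1/dth1 = dx_2/dth1
    d2 = (l2 * math.cos(th2), 0.0, l2 * math.sin(th2))  # dx_2/dth2
    zero = (0.0, 0.0, 0.0)                               # dx_1/dth2
    jac = {1: (d1, zero), 2: (d1, d2)}  # jac[k][i] = dx_k / dth_(i+1)
    mass = {1: m1, 2: m2}

    def dot(u, v):
        return sum(a * b for a, b in zip(u, v))

    return [[sum(mass[k] * dot(jac[k][i], jac[k][j]) for k in (1, 2))
             for j in range(2)] for i in range(2)]


m1, m2, l1, l2 = 1.0, 2.0, 1.5, 0.5
th1, th2 = 0.4, -1.2
g = metric(th1, th2, m1, m2, l1, l2)
closed_form = [[(m1 + m2) * l1 ** 2, m2 * l1 * l2 * math.cos(th1 - th2)],
               [m2 * l1 * l2 * math.cos(th1 - th2), m2 * l2 ** 2]]
print(g)
print(closed_form)

p1, p2 = positions(th1, th2, l1, l2)
print(p1[0] ** 2 + p1[2] ** 2 - l1 ** 2)  # f_3 at the computed point, should be ~0
```

The potential part of \(L_Q\) follows in the same way, by substituting \(z_1(q), z_2(q)\) into the gravitational potential.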

-

Exercise 2.16. Use D’Alembert’s principle to compute the constraint force acting on the spherical pendulum (Section 2.2) under the effect of the gravitational potential.

Now assume that the constraint force can only act radially outward. Imagine, for example, that the point particle is a pebble set in motion on the surface of a frictionless, impenetrable ball of dry ice at rest. When and where will the particle leave the sphere?